ethrex

ethrex is a minimalist, stable, modular, fast, and ZK native Ethereum client built from the ground up with zero-knowledge proving in mind. Whether you're running an L1 node or building an L2, ethrex provides the foundation for verifiable Ethereum execution.

Why ethrex?

| Feature | Description |

|---|---|

| Minimalist | ~100k lines of Rust vs 500k+ in mature clients. Less code means fewer bugs and faster iteration. |

| Multi-prover | Support for SP1, RISC Zero, ZisK, OpenVM, and TEEs. Choose the proving backend that fits your needs. |

| Unified L1/L2 | Same execution client for mainnet nodes and L2 rollups. Consistent behavior across layers. |

| ZK-Optimized | Data structures and algorithms designed to minimize proving overhead from day one. |

zkVM Integrations

ethrex integrates with multiple zero-knowledge virtual machines, giving you flexibility in how you prove Ethereum execution.

| zkVM | Organization | L1 Support | L2 Support | Status |

|---|---|---|---|---|

| SP1 | Succinct | ✓ | ✓ | Production |

| RISC Zero | RISC Zero | ✓ | ✓ | Production |

| ZisK | Polygon | ✓ | Planned | Experimental |

| OpenVM | Axiom | ✓ | Planned | Experimental |

| TEE (TDX) | Intel | — | ✓ | Production |

tip

For L2 deployments, you can run multiple provers simultaneously for redundancy. See multi-prover deployment.

Quick Start

Run an L1 node:

# Install ethrex

cargo install ethrex

# Start syncing mainnet

ethrex --network mainnet

Deploy an L2:

# See the full deployment guide

# https://docs.ethrex.xyz/l2/deployment/overview.html

Architecture Highlights

ethrex's architecture is optimized for both traditional execution and ZK proving:

- Stateless execution - Block execution can run with only the necessary witness data, enabling efficient proving

- Modular VM (LEVM) - Our EVM implementation is designed for clarity and easy auditing

- Optimized tries - Merkle Patricia Trie operations are tuned to reduce zkVM cycle counts

- Precompile patches - Cryptographic operations use zkVM-accelerated implementations when available

Learn More

- zkVM Integrations - Detailed guide to supported proving backends

- Benchmark Comparisons - Performance data vs other implementations

- Case Studies - How teams are using ethrex

- Architecture Overview - Deep dive into ethrex internals

Get Involved

- GitHub - Star us, open issues, contribute

- Telegram - Join the community chat

- Blog - Technical deep dives and updates

Getting started

Ethrex is a minimalist, stable, modular and fast implementation of the Ethereum protocol in Rust. The client supports running in two different modes:

- ethrex L1 - As a regular Ethereum execution client

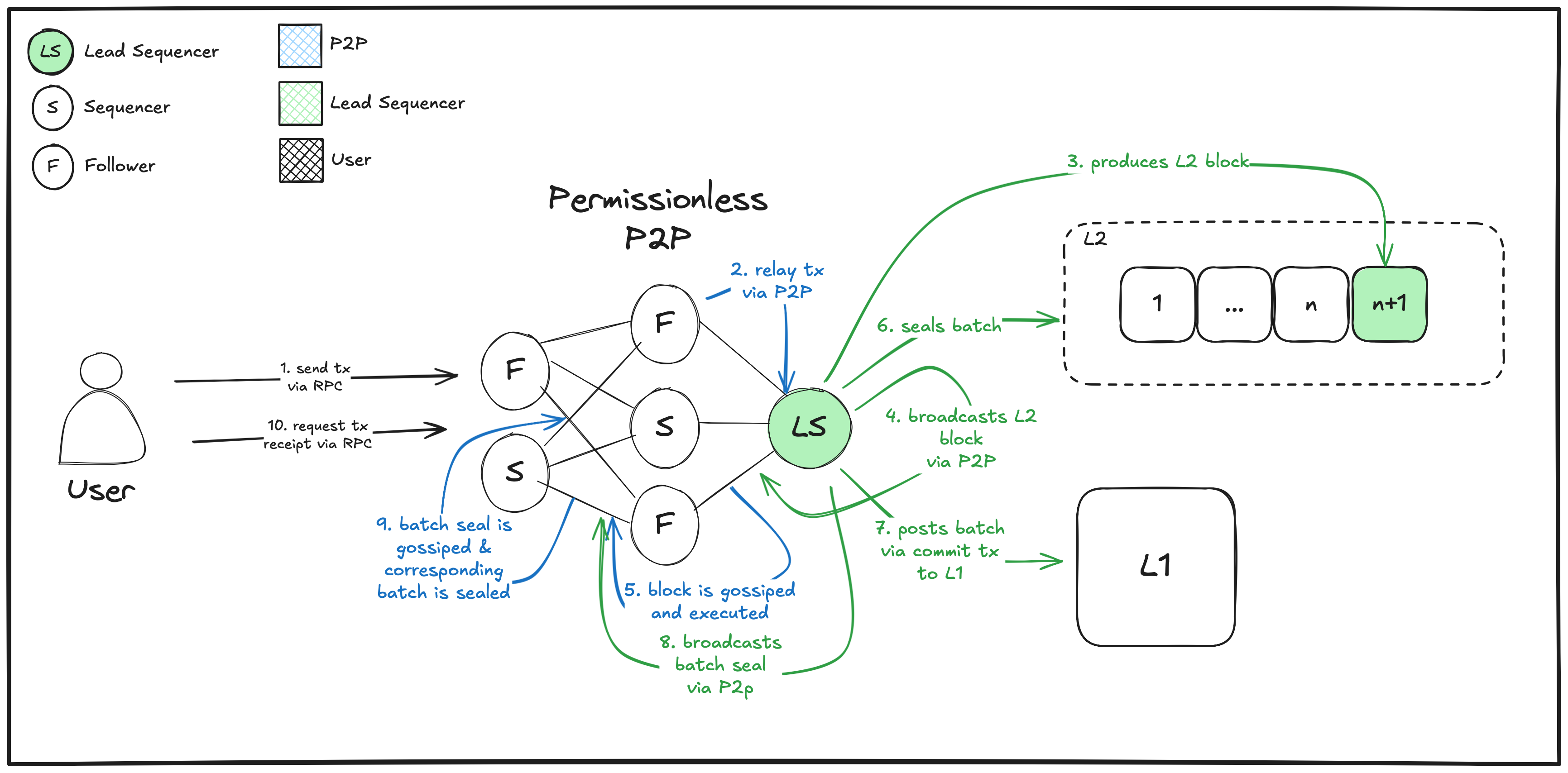

- ethrex L2 - As a multi-prover ZK-Rollup (supporting SP1, RISC Zero and TEEs), where block execution is proven and the proof sent to an L1 network for verification, thus inheriting the L1's security. Support for based sequencing is currently in the works.

Quickstart L1

caution

Before starting, ensure your hardware meets the hardware requirements.

Follow these steps to sync an ethrex node on the Hoodi testnet.

MacOS

Install ethrex and lighthouse:

# create secrets directory and jwt secret

mkdir -p ethereum/secrets/

cd ethereum/

openssl rand -hex 32 | tr -d "\n" | tee ./secrets/jwt.hex

# install lighthouse and ethrex

brew install lambdaclass/tap/ethrex

brew install lighthouse

On one terminal:

ethrex --authrpc.jwtsecret ./secrets/jwt.hex --network hoodi

and on another one:

lighthouse bn --network hoodi --execution-endpoint http://localhost:8551 --execution-jwt ./secrets/jwt.hex --checkpoint-sync-url https://hoodi.checkpoint.sigp.io --http

Linux x86

Install ethrex and lighthouse:

note

Go to https://github.com/sigp/lighthouse/releases/ and use the latest package there and replace that in the below commands

# create secrets directory and jwt secret

mkdir -p ethereum/secrets/

cd ethereum/

openssl rand -hex 32 | tr -d "\n" | tee ./secrets/jwt.hex

# install lighthouse and ethrex

curl -L https://github.com/lambdaclass/ethrex/releases/latest/download/ethrex-linux-x86_64 -o ethrex

chmod +x ethrex

curl -LO https://github.com/sigp/lighthouse/releases/download/v8.0.0/lighthouse-v8.0.0-x86_64-unknown-linux-gnu.tar.gz

tar -xvf lighthouse-v8.0.0-x86_64-unknown-linux-gnu.tar.gz

On one terminal:

./ethrex --authrpc.jwtsecret ./secrets/jwt.hex --network hoodi

and on another one:

./lighthouse bn --network hoodi --execution-endpoint http://localhost:8551 --execution-jwt ./secrets/jwt.hex --checkpoint-sync-url https://hoodi.checkpoint.sigp.io --http

For other CPU architectures, see the releases page.

Quickstart L2

Follow these steps to quickly launch a local L2 node. For advanced options and real deployments, see the links at the end.

MacOS

# install ethrex

brew install lambdaclass/tap/ethrex

ethrex l2 --dev

Linux x86

# install ethrex

curl -L https://github.com/lambdaclass/ethrex/releases/latest/download/ethrex-linux-x86_64 -o ethrex

chmod +x ethrex

./ethrex l2 --dev

For other CPU architectures, see the releases page.

Where to Start

-

Want to run ethrex in production as an execution client?

See Node operation for setup, configuration, monitoring, and best practices.

-

Interested in deploying your own L2?

See L2 rollup deployment for launching your own rollup, deploying contracts, and interacting with your L2.

-

Looking to contribute or develop?

Visit the Developer resources for local dev mode, testing, debugging, advanced CLI usage, and the CLI reference.

-

Want to understand how ethrex works?

Explore L1 fundamentals and L2 Architecture for deep dives into ethrex's design, sync modes, networking, and more.

Hardware Requirements

NOTE: The guidance in this document applies to running an L1 (Ethereum) node. L2 deployments (sequencers, provers and related infra) have different hardware profiles and operational requirements — see the "L2" section below for details.

Hardware requirements depend primarily on the network you're running — for example, Hoodi, Sepolia, or Mainnet.

General Recommendations

Across all networks, the following apply:

- Disk Type: Use high-performance NVMe SSDs. For multi-disk setups, software RAID 0 is recommended to maximize speed and capacity. Avoid hardware RAID, which can limit NVMe performance.

- RAM: Sufficient memory minimizes sync bottlenecks and improves stability under load.

- CPU: 4-8 Cores.

- x86-64 bit Processors must be compatible with the instruction set AVX2.

Disk and Memory Requirements by Network

| Network | Disk (Minimum) | Disk (Recommended) | RAM (Minimum) | RAM (Recommended) |

|---|---|---|---|---|

| Ethereum Mainnet | 500 GB | 1 TB | 32 GB | 64 GB |

| Ethereum Sepolia | 250 GB | 400 GB | 32 GB | 64 GB |

| Ethereum Hoodi | 60 GB | 100 GB | 32 GB | 64 GB |

L2

TBD

Installation

Ethrex is designed to run on Linux and macOS.

There are 4 supported methods to install ethrex:

After following the installation steps you should have a binary that can run an L1 client or a multi-prover ZK-rollup with support for SP1, RISC Zero and TEEs.

Install ethrex (binary distribution)

This guide explains how to quickly install the latest pre-built ethrex binary for your operating system.

Prerequisites

- curl (for downloading the binary)

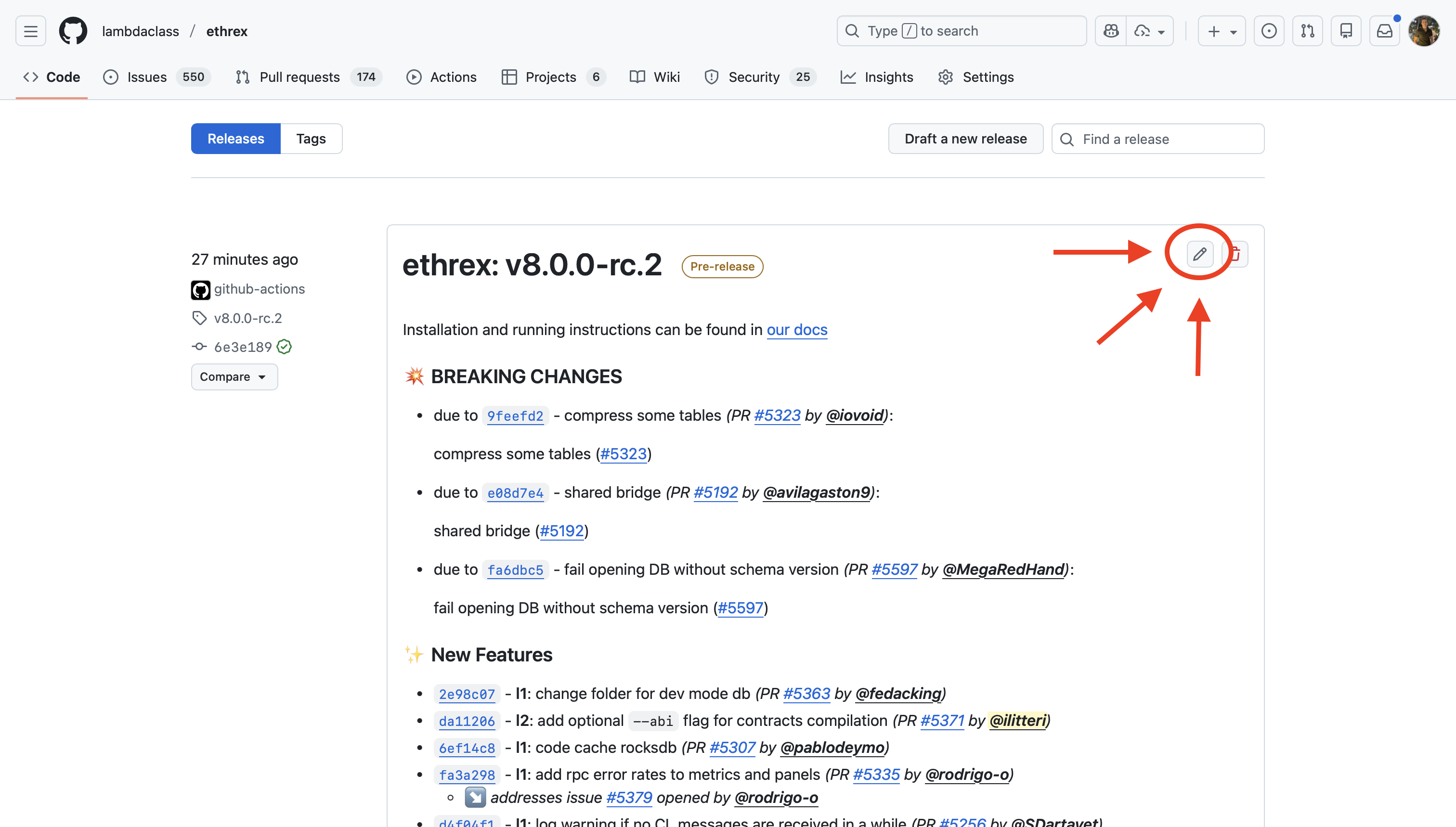

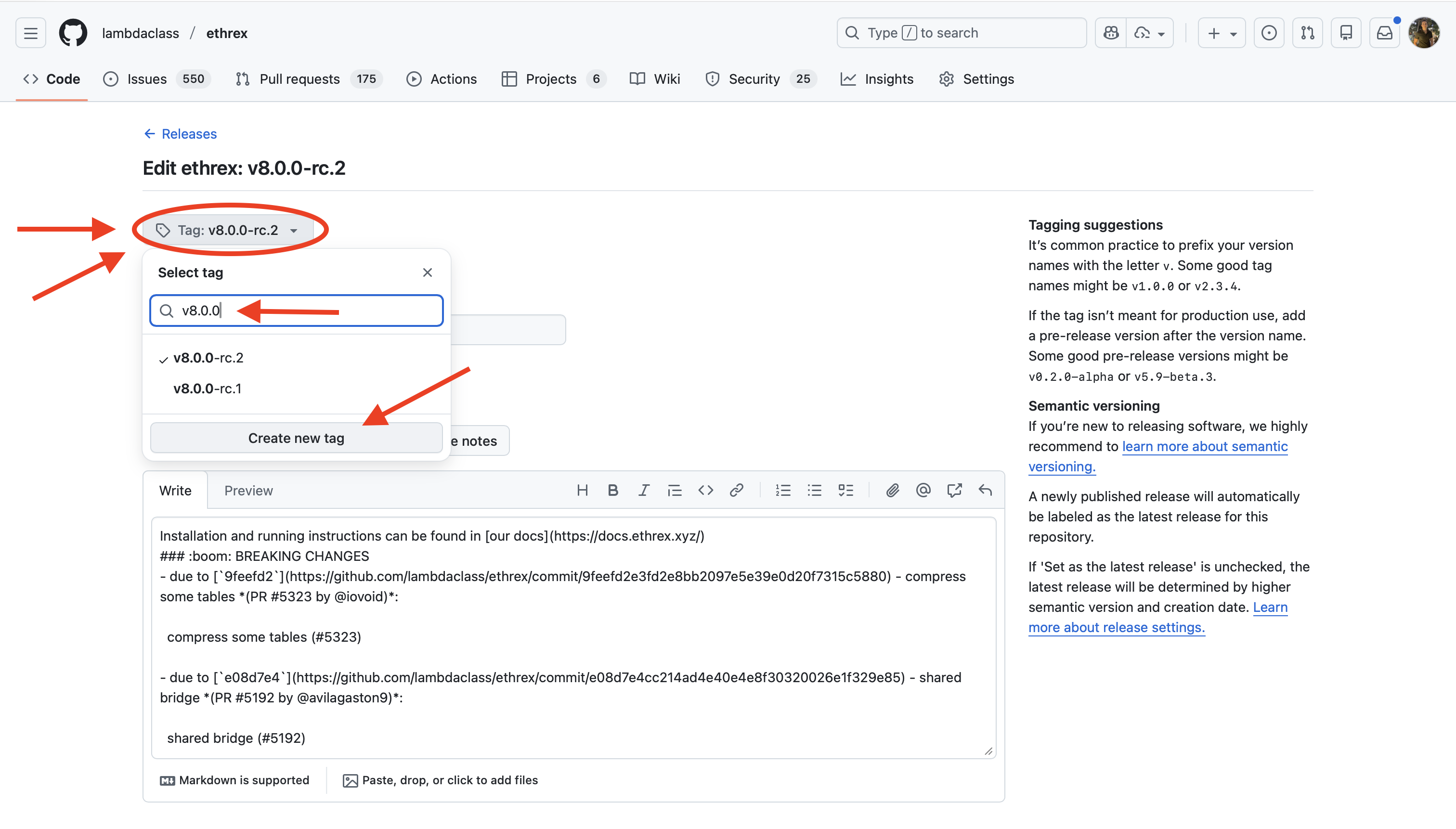

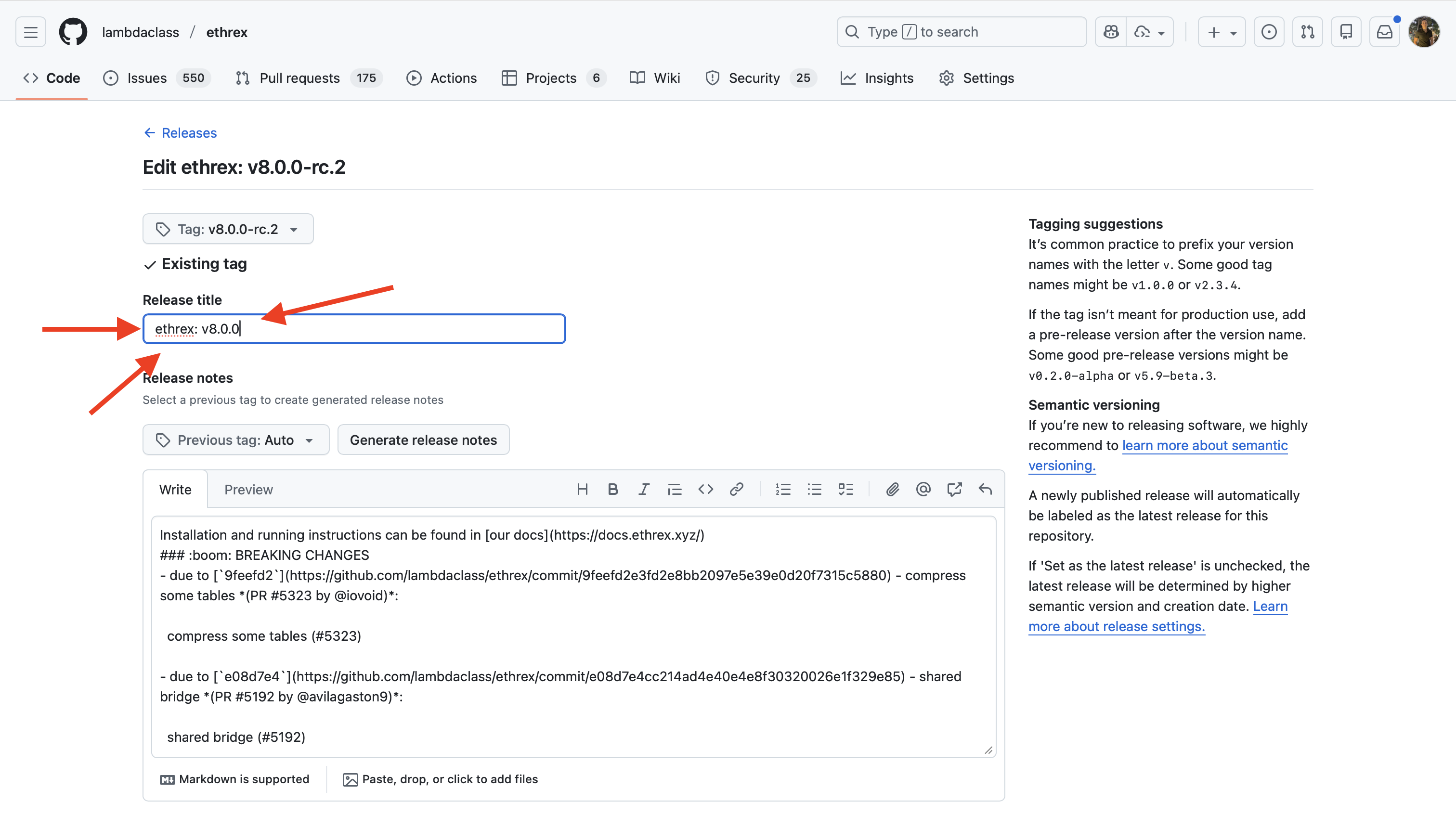

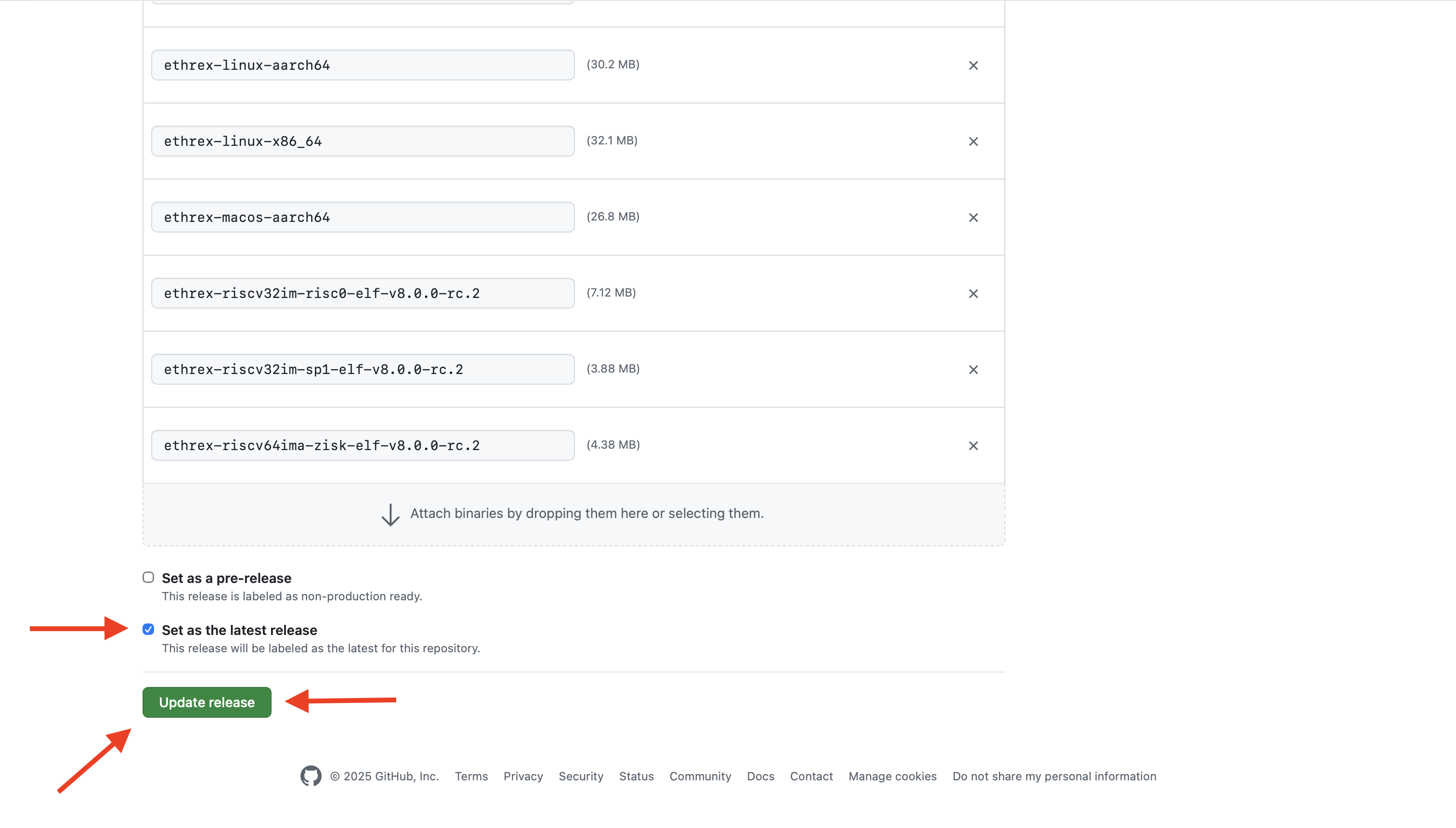

Download the latest release

Download the latest ethrex release for your OS from the GitHub Releases page.

Linux x86_64

curl -L https://github.com/lambdaclass/ethrex/releases/latest/download/ethrex-linux-x86_64 -o ethrex

Linux x86_64 with GPU support (for L2 prover)

If you want to run an L2 prover with GPU acceleration, download the GPU-enabled binary:

curl -L https://github.com/lambdaclass/ethrex/releases/latest/download/ethrex-linux-x86_64-gpu -o ethrex

Linux ARM (aarch64)

curl -L https://github.com/lambdaclass/ethrex/releases/latest/download/ethrex-linux-aarch64 -o ethrex

Linux ARM (aarch64) with GPU support (for L2 prover)

If you want to run an L2 prover with GPU acceleration, download the GPU-enabled binary:

curl -L https://github.com/lambdaclass/ethrex/releases/latest/download/ethrex-linux-aarch64-gpu -o ethrex

macOS (Apple Silicon, aarch64)

curl -L https://github.com/lambdaclass/ethrex/releases/latest/download/ethrex-macos-aarch64 -o ethrex

Set execution permissions

Make the binary executable:

chmod +x ethrex

(Optional) Move to a directory in your $PATH

To run ethrex from anywhere, move it to a directory in your $PATH (e.g., /usr/local/bin):

sudo mv ethrex /usr/local/bin/

Verify the installation

Check that Ethrex is installed and working:

ethrex --version

Install ethrex (package manager)

Coming soon.

Installing ethrex (docker)

Run Ethrex easily using Docker containers. This guide covers pulling and running official images.

Prerequisites

- Docker installed and running

Pulling the Docker Image

Latest stable release:

docker pull ghcr.io/lambdaclass/ethrex:latest

Latest development build:

docker pull ghcr.io/lambdaclass/ethrex:main

Specific version:

docker pull ghcr.io/lambdaclass/ethrex:<version-tag>

Find available tags in the GitHub repo.

Running the Docker Image

Check the Image

Verify the image is working:

docker run --rm ghcr.io/lambdaclass/ethrex --version

Start an ethrex Node

Run the following command to start a node in the background:

docker run \

--rm \

-d \

-v ethrex:/root/.local/share/ethrex \

-p 8545:8545 \

-p 8551:8551 \

-p 30303:30303 \

-p 30303:30303/udp \

-p 9090:9090 \

--name ethrex \

ghcr.io/lambdaclass/ethrex \

--authrpc.addr 0.0.0.0

What this does:

- Starts a container named

ethrex - Publishes ports:

8545: JSON-RPC server (TCP)8551: Auth JSON-RPC server (TCP)30303: P2P networking (TCP/UDP)9090: Metrics (TCP)

- Mounts the Docker volume

ethrexto persist blockchain data

Tip: You can add more Ethrex CLI arguments at the end of the command as needed.

Managing the Container

View logs:

docker logs -f ethrex

Stop the node:

docker stop ethrex

Building ethrex from source

Build ethrex yourself for maximum flexibility and experimental features.

Prerequisites

- Rust toolchain (use

rustupfor easiest setup) - libclang (for RocksDB)

- Git

- solc (v0.8.31) (for L2 development)

L2 contracts

If you want to install ethrex for L2 development, you may set the COMPILE_CONTRACTS env var, so the binary has the necessary contract code.

export COMPILE_CONTRACTS=true

Install via cargo install

The fastest way to install ethrex from source:

cargo install --locked ethrex --git https://github.com/lambdaclass/ethrex.git

Optional features:

- Add

--features sp1,risc0to enable SP1 and/or RISC0 provers - Add

--features gpufor CUDA GPU support

Install a specific version:

cargo install --locked ethrex --git https://github.com/lambdaclass/ethrex.git --tag <version-tag>

Find available tags in the GitHub repo.

Verify installation:

ethrex --version

Build manually with cargo build

Clone the repository (replace <version-tag> with the desired version):

git clone --branch <version-tag> --depth 1 https://github.com/lambdaclass/ethrex.git

cd ethrex

Build the binary:

cargo build --bin ethrex --release

Optional features:

- Add

--features sp1,risc0to enable SP1 and/or RISC0 provers - Add

--features gpufor CUDA GPU support

The built binary will be in target/release/ethrex.

Verify the build:

./target/release/ethrex --version

(Optional) Move the binary to your $PATH:

sudo mv ./target/release/ethrex /usr/local/bin/

Running an Ethereum Node with ethrex

This section explains how to run an Ethereum L1 node using ethrex. Here you'll find:

- Requirements for running a node (including the need for a consensus client)

- Step-by-step instructions for setup and configuration

- Guidance for both new and experienced users

If you already have a consensus client running, you can skip directly to the node startup instructions. Otherwise, continue to the next section for help setting up a consensus client.

Connecting to a consensus client

Ethrex is an execution client built for Ethereum networks after the merge. As a result, ethrex must operate together with a consensus client to fully participate in the network.

Consensus clients

There are several consensus clients and all of them work with ethrex. When choosing a consensus client we suggest you keep in mind client diversity.

Configuring ethrex

JWT secret

Consensus clients and execution clients communicate through an authenticated JSON-RPC API. The authentication is done through a jwt secret. Ethrex automatically generates the jwt secret and saves it to the current working directory by default. You can also use your own previously generated jwt secret by using the --authrpc.jwtsecret flag or JWTSECRET_PATH environment variable. If the jwt secret at the specified path does not exist ethrex will create it.

Auth RPC server

By default the server is exposed at http://localhost:8551 but both the address and the port can be modified using the --authrpc.addr and --authrpc.port flags respectively.

Example

ethrex --authrpc.jwtsecret path/to/jwt.hex --authrpc.addr localhost --authrpc.port 8551

Node startup

Supported networks

Ethrex is designed to support Ethereum mainnet and its testnets

| Network | Chain id | Supported sync modes |

|---|---|---|

| mainnet | 1 | snap |

| sepolia | 11155111 | snap |

| holesky | 17000 | full, snap |

| hoodi | 560048 | full, snap |

For more information about sync modes please read the sync modes document. Full syncing is the default, to switch to snap sync use the flag --syncmode snap

Run an Ethereum node

This guide will assume that you already installed ethrex and you know how to set up a consensus client to communicate with ethrex.

To sync with mainnet

ethrex --syncmode snap

To sync with sepolia

ethrex --network sepolia --syncmode snap

To sync with holesky

ethrex --network holesky

To sync with hoodi

ethrex --network hoodi

Once started, you should be able to check the sync status with:

curl http://localhost:8545 \

-H 'content-type: application/json' \

-d '{"jsonrpc":"2.0","method":"eth_syncing","params":[],"id":1}'

The answer should be:

{"id":1,"jsonrpc":"2.0","result":{"startingBlock":"0x0","currentBlock":"0x0","highestBlock":"0x0"}}

Run an Ethereum node with Docker

You can simply start a node with a Consensus client and ethrex as Execution client with Docker using the docker-compose.yaml

curl -L -o docker-compose.yaml https://raw.githubusercontent.com/lambdaclass/ethrex/refs/heads/main/docker-compose.yaml

docker compose up

Or you can set a different network:

ETHREX_NETWORK=hoodi docker compose up

For more details and configuration options:

Configuration

This page covers the basic configuration options for running an L1 node with ethrex. Full list of options can be found at the CLI reference

Sync Modes

Ethrex supports different sync modes for node operation:

- full: Downloads and verifies the entire chain.

- snap: Fast sync using state snapshots (recommended for most users).

Set the sync mode with:

ethrex --sync <mode>

File Locations

By default, ethrex stores its data in:

- Linux:

~/.local/share/ethrex - macOS:

~/Library/Application Support/ethrex

You can change the data directory with:

ethrex --datadir <path>

Ports

Default ports used by ethrex:

8545: JSON-RPC (HTTP)8551: Auth JSON-RPC30303: P2P networking (TCP/UDP)9090: Metrics

You can change ports with the corresponding flags: --http.port, --authrpc.port, --p2p.port, --discovery.port, --metrics.port.

All services listen on 0.0.0.0 by default, except for the auth RPC, which listens on 127.0.0.1. This can also be changed with flags (e.g., --http.addr).

Log Levels

Control log verbosity with:

ethrex --log.level <level>

Levels: error, warn, info (default), debug, trace

Dev Mode (Localnet)

For local development and testing, you can use dev mode:

ethrex --dev

This runs a local network with block production and no external peers. This network has a list of predefined accounts with funds for testing purposes.

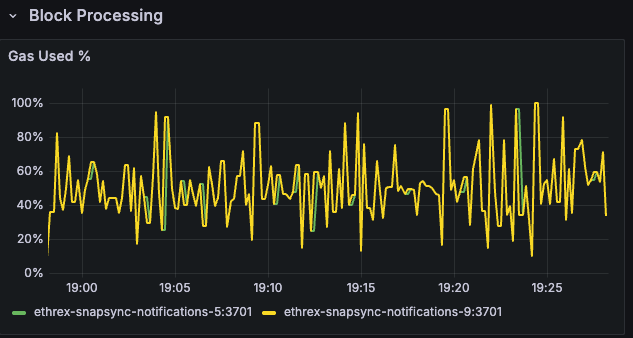

Monitoring and Metrics

Ethrex exposes metrics in Prometheus format on port 9090 by default. But the easiest way to monitor your node is to use the provided Docker Compose stack, which includes Prometheus and Grafana preconfigured. For that we are currently using port 3701, this will match the default in the future but for now if running the containers we expected to have the ethrex metrics exposed on port 3701.

Quickstart: Monitoring Stack with Docker Compose

-

Clone the repository:

git clone https://github.com/lambdaclass/ethrex.git cd ethrex/metrics -

Start the monitoring stack:

# Optional: if you have updated from a previous version, stop first the docker compose. # docker compose -f docker-compose-metrics.yaml -f docker-compose-metrics-l1.overrides.yaml down docker compose -f docker-compose-metrics.yaml -f docker-compose-metrics-l1.overrides.yaml up -d

Note: You might want to restart the docker containers in case of an update from a previous ethrex version to make sure the latest provisioned configurations are applied:

-

Run ethrex with metrics enabled:

Make sure to start ethrex with the

--metricsflag and set the port to3701:ethrex --authrpc.jwtsecret ./secrets/jwt.hex --network hoodi --metrics --metrics.port 3701

This will launch Prometheus and Grafana, already set up to scrape ethrex metrics.

Note: We depend on ethereum-metrics-exporter for some key metrics to define variables on the Grafana dashboards. For it to work properly we need the consensus client to expose its RPC endpoints. For example if you are running lighthouse you may need to add --http and --http-address 0.0.0.0 flags to it before the dashboards pick up all metrics. This won't be needed in the near future

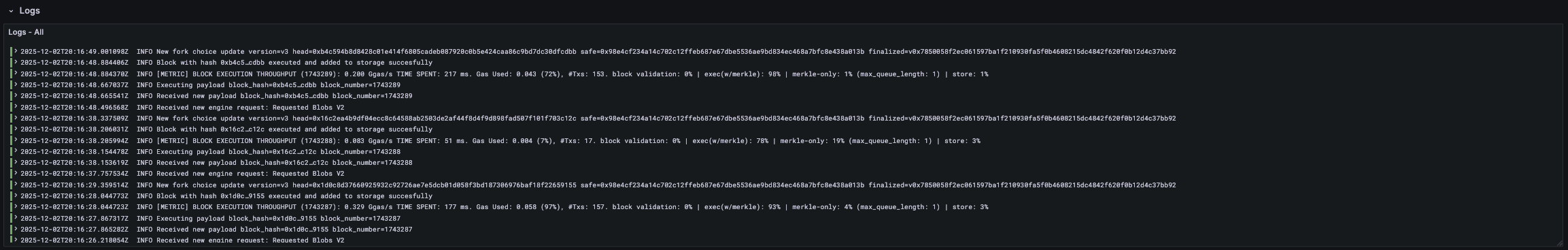

Logs

Ethrex logs are written to stdout by default. To enable file logging, you must specify the --log.dir argument, with this you'll be able to have Promtail collect the logs and send them to Grafana Loki for log visualization.

- Promtail Configuration:

metrics/provisioning/promtail/promtail.yaml

The promtail configuration expects by default that logs are stored in ./logs (relative to the repo root). To correctly see the logs in Grafana, ensure that Promtail can access the logs directory:

- If running via Docker, ensure you map a volume to the log directory and pass

--log.dirto the container. - If running standalone, use

--log.dir ./logswhen running ethrex.

ethrex --log.dir ./logs ...

If you choose to use a different directory, you must set the ETHREX_LOGS_DIR environment variable when running the metrics stack to point to your custom logs directory.

ETHREX_LOGS_DIR=/path/to/your/logs docker compose -f docker-compose-metrics.yaml -f docker-compose-metrics-l1.overrides.yaml up

You can view the logs in Grafana by navigating to the logs row in our dashboard.

Running Docker Container Manually

If you run the ethrex Docker container manually (e.g., docker run ...) or use a custom docker-compose.yaml outside of this repository, you must ensure the logs are accessible to the monitoring stack.

The ethrex container writes logs to the directory specified by --log.dir. You should mount this directory to a location on your host machine that Promtail can access.

Example:

docker run -d \

--name ethrex \

-v $(pwd)/logs:/data/logs \

ghcr.io/lambdaclass/ethrex:main \

--datadir /data \

--log.dir /data/logs

If you are using the provided monitoring stack in metrics/, it expects logs to be in the logs directory at the root of the repository (or ../logs relative to the metrics folder). Ensure your volume mount matches this expectation or update the Promtail volume configuration.

Accessing Metrics and Dashboards

- Prometheus: http://localhost:9091

- Grafana: http://localhost:3001

- Default login:

admin/admin - Prometheus is preconfigured as a data source

- Example dashboards are included in the repo

- Default login:

Metrics from ethrex will be available at http://localhost:3701/metrics in Prometheus format if you followed step 3.

For detailed information on the provided Grafana dashboards, see our L1 Dashboard document.

Custom Configuration

Your ethrex setup may differ from the default configuration. Check your endpoints at provisioning/prometheus/prometheus_l1_sync_docker.yaml.

Also if you have a centralized Prometheus or Grafana setup, you can adapt the provided configuration files to fit your environment, or even stop the docker containers that run Prometheus and/or Grafana leaving only the additional ethereum-metrics-exporter running alongside ethrex to export the metrics to your existing monitoring stack.

docker compose -f docker-compose-metrics.yaml -f docker-compose-metrics-l1.overrides.yaml up -d ethereum-metrics-exporter

For manual setup or more details, see the Prometheus documentation and Grafana documentation.

L1 Architecture

This section covers the internal architecture of ethrex as an Ethereum L1 execution client. It explains how the different components interact, how blocks flow through the system, and the design decisions behind the implementation.

- System Overview - High-level architecture and component interactions

- Block Execution Pipeline - How blocks are validated and executed

- Sync State Machine - Full sync and snap sync algorithms

- Crate Map - Overview of all crates and their responsibilities

System Overview

This document provides a high-level overview of ethrex's L1 architecture as an Ethereum execution client.

Introduction

ethrex is a Rust implementation of an Ethereum execution client. It implements the Ethereum protocol specification, including:

- Block validation and execution

- State management via Merkle Patricia Tries

- P2P networking (devp2p stack)

- JSON-RPC API for external interaction

- Engine API for consensus client communication

High-Level Architecture

┌─────────────────────┐

│ Consensus Client │

│ (Lighthouse, etc) │

└──────────┬──────────┘

│ Engine API

│ (JWT auth)

▼

┌──────────────────────────────────────────────────────────────────────────────┐

│ ethrex Execution Client │

│ │

│ ┌─────────────┐ ┌──────────────┐ ┌────────────────────────────────┐ │

│ │ JSON-RPC │ │ Engine API │ │ P2P Network │ │

│ │ Server │ │ Handler │ │ ┌────────┐ ┌──────────────┐ │ │

│ │ │ │ │ │ │DiscV4 │ │ RLPx │ │ │

│ │ eth_* │ │ engine_* │ │ │ │ │ ┌────────┐ │ │ │

│ │ debug_* │ │ forkchoice │ │ │ │ │ │ eth/68 │ │ │ │

│ │ txpool_* │ │ newPayload │ │ │ │ │ │ snap/1 │ │ │ │

│ │ admin_* │ │ getPayload │ │ │ │ │ └────────┘ │ │ │

│ └──────┬──────┘ └──────┬───────┘ │ └────────┘ └──────────────┘ │ │

│ │ │ └────────────────┬───────────────┘ │

│ │ │ │ │

│ └───────────────────┼──────────────────────────────┘ │

│ │ │

│ ▼ │

│ ┌───────────────────────────────────────────────────────────────────────┐ │

│ │ Blockchain │ │

│ │ ┌─────────────┐ ┌─────────────┐ ┌─────────────┐ ┌─────────────┐ │ │

│ │ │ Mempool │ │ Payload │ │ Fork Choice │ │ Block │ │ │

│ │ │ │ │ Builder │ │ Update │ │ Pipeline │ │ │

│ │ └─────────────┘ └─────────────┘ └─────────────┘ └─────────────┘ │ │

│ └───────────────────────────────────────────────────────────────────────┘ │

│ │ │

│ ▼ │

│ ┌───────────────────────────────────────────────────────────────────────┐ │

│ │ EVM (LEVM) │ │

│ │ ┌─────────────┐ ┌─────────────┐ ┌─────────────┐ ┌─────────────┐ │ │

│ │ │Transaction │ │ Opcode │ │ Precompiled│ │ State │ │ │

│ │ │ Execution │ │ Handler │ │ Contracts │ │ Transitions │ │ │

│ │ └─────────────┘ └─────────────┘ └─────────────┘ └─────────────┘ │ │

│ └───────────────────────────────────────────────────────────────────────┘ │

│ │ │

│ ▼ │

│ ┌───────────────────────────────────────────────────────────────────────┐ │

│ │ Storage │ │

│ │ ┌───────────────────────────────────────────────────────────────┐ │ │

│ │ │ Store (High-level API) │ │ │

│ │ └───────────────────────────────────────────────────────────────┘ │ │

│ │ │ │ │ │

│ │ ┌──────────┴──────────┐ ┌─────────┴────────┐ │ │

│ │ ▼ ▼ ▼ ▼ │ │

│ │ ┌─────────────┐ ┌─────────────────┐ ┌───────────────┐ │ │

│ │ │ InMemory │ │ RocksDB │ │ State Trie │ │ │

│ │ │ (Testing) │ │ (Production) │ │ (MPT + Flat) │ │ │

│ │ └─────────────┘ └─────────────────┘ └───────────────┘ │ │

│ └───────────────────────────────────────────────────────────────────────┘ │

└──────────────────────────────────────────────────────────────────────────────┘

Core Components

1. Network Layer

The network layer handles all external communication:

JSON-RPC Server (crates/networking/rpc)

- Implements the Ethereum JSON-RPC specification

- Namespaces:

eth_*,debug_*,txpool_*,admin_*,web3_* - Validates and broadcasts incoming transactions

Engine API (crates/networking/rpc/engine)

- Communication channel with the consensus client

- Handles

engine_forkchoiceUpdatedV{1,2,3},engine_newPayloadV{1,2,3},engine_getPayloadV{1,2,3} - JWT authentication for security

- Triggers sync when receiving unknown block hashes

P2P Network (crates/networking/p2p)

- DiscV4: Node discovery protocol for finding peers

- RLPx: Encrypted transport layer for peer communication

- eth/68: Block and transaction propagation protocol

- snap/1: Snap sync protocol for fast state download

2. Blockchain Layer

The blockchain layer manages chain state and block processing:

Blockchain (crates/blockchain)

- Orchestrates block validation and execution

- Manages the mempool for pending transactions

- Handles fork choice updates from the consensus layer

- Coordinates payload building for block production

Mempool

- Stores pending transactions awaiting inclusion

- Filters transactions by gas price, nonce, and validity

- Supports transaction replacement (EIP-1559 and EIP-4844)

- Broadcasts new transactions to peers

Fork Choice

- Implements Ethereum's fork choice rule

- Updates the canonical chain based on consensus client signals

- Handles chain reorganizations

3. Execution Layer

LEVM (Lambda EVM) (crates/vm/levm)

- Custom EVM implementation in Rust

- Executes smart contract bytecode

- Implements all EVM opcodes up to the latest hard fork

- Handles precompiled contracts

Block Execution Pipeline

- Validate block header

- Apply system-level operations (beacon root, block hash storage)

- Execute transactions in order

- Process withdrawals (post-Merge)

- Extract requests (post-Prague)

- Compute state root and verify against header

4. Storage Layer

Store (crates/storage)

- High-level API for all blockchain data

- Supports multiple backends: InMemory (testing), RocksDB (production)

- Manages block headers, bodies, receipts, and state

State Trie (crates/common/trie)

- Merkle Patricia Trie implementation

- Stores account states and contract storage

- Supports flat key-value storage for performance

- Handles trie node caching and persistence

Data Flow

Block Import (from P2P)

P2P Peer → Block Headers/Bodies → Syncer → Blockchain.add_block() → EVM.execute() → Store

- Syncer requests headers from peers

- Headers are validated (parent exists, timestamps, gas limits, etc.)

- Bodies are requested and matched to headers

- Blocks are executed in batches

- State is committed to storage

Block Import (from Consensus Client)

Consensus Client → engine_newPayloadV3 → Blockchain.add_block_pipeline() → EVM.execute() → Store

→ engine_forkchoiceUpdated → Fork Choice Update → Canonical Chain Update

- Consensus client sends new payload via Engine API

- Block is validated and executed

- Fork choice update makes the block canonical

- Sync is triggered if the block's parent is unknown

Transaction Lifecycle

User → JSON-RPC (eth_sendRawTransaction) → Mempool → Broadcast to Peers

→ Include in Block

- Transaction arrives via JSON-RPC or P2P

- Validated for signature, nonce, balance, gas

- Added to mempool if valid

- Broadcast to connected peers

- Eventually included in a block by the payload builder

Sync Modes

Full Sync

Downloads and executes every block from genesis (or a known checkpoint):

- Request block headers from peers

- Request block bodies for each header

- Execute blocks in batches (1024 blocks per batch)

- Commit state after each batch

- Update fork choice when sync head is reached

Snap Sync

Downloads state directly instead of executing all historical blocks:

- Download block headers to find a recent "pivot" block

- Download account state trie leaves via snap protocol

- Download storage tries for accounts with storage

- Heal any missing trie nodes (state may have changed during download)

- Download bytecode for contract accounts

- Execute recent blocks (post-pivot) to catch up

See Sync State Machine for detailed documentation.

Concurrency Model

ethrex uses Tokio for async I/O with the following patterns:

- Async tasks for network I/O (RPC, P2P)

- Blocking tasks for CPU-intensive work (block execution, trie operations)

- Channels for inter-component communication (sync signals, mempool updates)

- RwLock/Mutex for shared state (mempool, peer table)

Configuration

Key configuration options:

| Option | Description | Default |

|---|---|---|

--network | Network to connect to | mainnet |

--datadir | Data directory for DB and keys | ~/.ethrex |

--syncmode | Sync mode (full or snap) | snap |

--authrpc.port | Engine API port | 8551 |

--http.port | JSON-RPC HTTP port | 8545 |

--discovery.port | P2P discovery port | 30303 |

See Configuration for the complete reference.

Next Steps

- Block Execution Pipeline - Deep dive into block processing

- Sync State Machine - Detailed sync algorithm documentation

- Crate Map - Overview of all crates and dependencies

Block Execution Pipeline

This document describes how ethrex validates and executes blocks, from receiving a block to committing state changes.

Overview

Block execution in ethrex follows the Ethereum specification closely. The pipeline handles:

- Block header validation

- System-level operations (beacon root contract, block hash storage)

- Transaction execution

- Withdrawal processing

- Request extraction (post-Prague)

- State root verification

Entry Points

Blocks enter the execution pipeline through two main paths:

1. P2P Sync (Syncer)

During synchronization, blocks are fetched from peers and executed in batches:

#![allow(unused)] fn main() { // crates/networking/p2p/sync.rs Syncer::add_blocks() → Blockchain::add_blocks_in_batch() → execute each block }

2. Engine API (engine_newPayloadV{1,2,3})

Post-Merge, the consensus client sends new blocks via the Engine API:

#![allow(unused)] fn main() { // crates/networking/rpc/engine/payload.rs NewPayloadV3::handle() → Blockchain::add_block() → execute block }

Block Header Validation

Before executing a block, its header is validated:

#![allow(unused)] fn main() { // crates/blockchain/blockchain.rs fn validate_header(header: &BlockHeader, parent: &BlockHeader) -> Result<()> }

Validation Checks

| Check | Description |

|---|---|

| Parent hash | Must match parent block's hash |

| Block number | Must be parent.number + 1 |

| Timestamp | Must be > parent.timestamp |

| Gas limit | Must be within bounds of parent (EIP-1559) |

| Base fee | Must match calculated value (EIP-1559) |

| Difficulty | Must be 0 (post-Merge) |

| Nonce | Must be 0 (post-Merge) |

| Ommers hash | Must be empty hash (post-Merge) |

| Withdrawals root | Must match if Shanghai activated |

| Blob gas fields | Must be present if Cancun activated |

| Requests hash | Must match if Prague activated |

Execution Flow

┌─────────────────────────────────────────────────────────────────────┐

│ Block Execution │

├─────────────────────────────────────────────────────────────────────┤

│ │

│ 1. ┌────────────────────────────────────────────────────────────┐ │

│ │ System Operations (post-Cancun) │ │

│ │ • Store beacon block root (EIP-4788) │ │

│ │ • Store parent block hash (EIP-2935) │ │

│ └────────────────────────────────────────────────────────────┘ │

│ │ │

│ ▼ │

│ 2. ┌────────────────────────────────────────────────────────────┐ │

│ │ Transaction Execution │ │

│ │ For each transaction: │ │

│ │ • Validate signature and nonce │ │

│ │ • Check sender balance │ │

│ │ • Execute in EVM │ │

│ │ • Apply gas refunds │ │

│ │ • Update account states │ │

│ │ • Generate receipt │ │

│ └────────────────────────────────────────────────────────────┘ │

│ │ │

│ ▼ │

│ 3. ┌────────────────────────────────────────────────────────────┐ │

│ │ Withdrawal Processing (post-Shanghai) │ │

│ │ For each withdrawal: │ │

│ │ • Credit validator address with withdrawal amount │ │

│ └────────────────────────────────────────────────────────────┘ │

│ │ │

│ ▼ │

│ 4. ┌────────────────────────────────────────────────────────────┐ │

│ │ Request Extraction (post-Prague) │ │

│ │ • Deposit requests from logs │ │

│ │ • Withdrawal requests from system contract │ │

│ │ • Consolidation requests from system contract │ │

│ └────────────────────────────────────────────────────────────┘ │

│ │ │

│ ▼ │

│ 5. ┌────────────────────────────────────────────────────────────┐ │

│ │ State Finalization │ │

│ │ • Compute state root from account updates │ │

│ │ • Verify against header.state_root │ │

│ │ • Commit changes to storage │ │

│ └────────────────────────────────────────────────────────────┘ │

│ │

└─────────────────────────────────────────────────────────────────────┘

Transaction Execution

Each transaction goes through the following steps:

1. Pre-Execution Validation

#![allow(unused)] fn main() { // crates/blockchain/validate.rs fn validate_transaction(tx: &Transaction, header: &BlockHeader) -> Result<()> }

- Signature recovery and validation

- Nonce check (must match account nonce)

- Gas limit check (must be <= block gas remaining)

- Balance check (must cover

gas_limit * gas_price + value) - Intrinsic gas calculation

- EIP-2930 access list validation

- EIP-4844 blob validation (if applicable)

2. EVM Execution

#![allow(unused)] fn main() { // crates/vm/levm/src/vm.rs VM::execute() → Result<ExecutionReport> }

The EVM executes the transaction bytecode:

- Contract Call: Execute target contract code

- Contract Creation: Deploy new contract, execute constructor

- Transfer: Simple value transfer (no code execution)

During execution:

- Opcodes are decoded and executed

- Gas is consumed for each operation

- State changes are tracked (but not committed)

- Logs are collected

- Errors revert all changes

3. Post-Execution

After EVM execution:

#![allow(unused)] fn main() { // crates/vm/levm/src/vm.rs fn finalize_transaction() -> Receipt }

- Calculate gas refund (max 1/5 of gas used, post-London)

- Credit coinbase with priority fee

- Generate receipt with logs and status

- Update cumulative gas used

State Management

Account Updates

State changes are tracked as AccountUpdate structs:

#![allow(unused)] fn main() { pub struct AccountUpdate { pub address: Address, pub removed: bool, pub info: Option<AccountInfo>, // balance, nonce, code_hash pub code: Option<Bytes>, // bytecode if changed pub added_storage: HashMap<H256, U256>, } }

State Root Computation

After all transactions execute:

#![allow(unused)] fn main() { // crates/storage/store.rs Store::apply_account_updates_batch(parent_hash, updates) -> StateTrieHash }

This is one of the two merkleization backends (the other is used by add_block_pipeline):

- Load parent state trie

- Apply each account update to the trie

- For accounts with storage changes, update storage tries

- Compute new state root

- Verify it matches

header.state_root

Payload Building

When ethrex acts as a block producer (validator), it builds payloads:

#![allow(unused)] fn main() { // crates/blockchain/payload.rs Blockchain::build_payload(template: Block) -> PayloadBuildResult }

Building Process

-

Fetch transactions from mempool, filtered by:

- Base fee (must afford current base fee)

- Blob fee (for EIP-4844 transactions)

- Nonce ordering (consecutive nonces per sender)

-

Order transactions by effective tip (highest first)

-

Execute transactions until:

- Block gas limit reached

- No more valid transactions

- Blob limit reached (for blob transactions)

-

Finalize block:

- Apply withdrawals

- Extract requests

- Compute state root

- Compute receipts root

- Generate logs bloom

Payload Rebuilding

Payloads are rebuilt continuously until requested:

#![allow(unused)] fn main() { // crates/blockchain/payload.rs Blockchain::build_payload_loop(payload, cancel_token) }

This maximizes MEV by including the most profitable transactions available.

Error Handling

Block execution can fail for various reasons:

| Error | Cause | Recovery |

|---|---|---|

InvalidBlock::InvalidStateRoot | Computed state root doesn't match header | Reject block |

InvalidBlock::InvalidGasUsed | Gas used doesn't match header | Reject block |

InvalidBlock::InvalidTransaction | Transaction validation failed | Reject block |

EvmError::OutOfGas | Transaction ran out of gas | Revert transaction, continue block |

EvmError::InvalidOpcode | Unknown opcode encountered | Revert transaction, continue block |

Performance Considerations

Batch Execution

During sync, blocks are executed in batches (default 1024 blocks):

#![allow(unused)] fn main() { // crates/networking/p2p/sync.rs const EXECUTE_BATCH_SIZE: usize = 1024; }

This reduces database commits and improves throughput.

Parallel Trie Operations

Storage trie updates can be parallelized across accounts:

#![allow(unused)] fn main() { // Uses rayon for parallel iteration account_updates.par_iter().map(|update| update_storage_trie(update)) }

State Caching

The EVM maintains a cache of accessed accounts and storage slots to minimize database reads during execution.

Hard Fork Handling

Block execution adapts based on the active hard fork:

#![allow(unused)] fn main() { // crates/common/types/chain_config.rs impl ChainConfig { pub fn fork(&self, timestamp: u64) -> Fork { ... } pub fn is_cancun_activated(&self, timestamp: u64) -> bool { ... } pub fn is_prague_activated(&self, timestamp: u64) -> bool { ... } } }

Each fork may introduce:

- New opcodes (e.g.,

PUSH0in Shanghai) - New precompiles (e.g., point evaluation in Cancun)

- New system contracts (e.g., beacon root contract in Cancun)

- Changed gas costs

- New transaction types

Related Documentation

- LEVM Documentation - EVM implementation details

- Sync State Machine - How blocks flow during sync

- Crate Map - Overview of involved crates

Sync State Machine

This document describes the synchronization algorithms implemented in ethrex, including full sync and snap sync.

Overview

ethrex supports two synchronization modes:

| Mode | Description | Use Case |

|---|---|---|

| Full Sync | Downloads and executes every block | Maximum security, slower |

| Snap Sync | Downloads state directly, executes recent blocks | Faster initial sync |

Sync Manager Architecture

┌─────────────────────────────────────────────────────────────────┐

│ SyncManager │

│ • Receives sync targets from Engine API / P2P │

│ • Tracks current sync mode (Full / Snap) │

│ • Coordinates Syncer for actual sync work │

└──────────────────────────────┬──────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────┐

│ Syncer │

│ • Executes sync cycles │

│ • Manages peer connections via PeerHandler │

│ • Handles both full and snap sync algorithms │

└─────────────────────────────────────────────────────────────────┘

Sync Triggers

Synchronization is triggered by:

- Engine API:

engine_forkchoiceUpdatedwith unknown head hash - P2P: Receiving block announcements for unknown blocks

- Startup: When local chain is behind network

#![allow(unused)] fn main() { // crates/networking/rpc/engine/fork_choice.rs match apply_fork_choice(...) { Err(InvalidForkChoice::Syncing) => { syncer.sync_to_head(fork_choice_state.head_block_hash); // Return SYNCING status to consensus client } } }

Full Sync Algorithm

Full sync downloads blocks from the network and executes each one to reconstruct the state.

State Machine

┌─────────────────┐

│ START SYNC │

└────────┬────────┘

│

▼

┌─────────────────┐

┌─────────│ Request Headers │◄─────────────┐

│ └────────┬────────┘ │

│ │ │

│ ▼ │

│ ┌─────────────────┐ │

│ │ Validate Headers│ │

│ └────────┬────────┘ │

│ │ │

│ ▼ │

│ ┌─────────────────┐ │

│ │ Found Canonical │──No──────────┘

│ │ Ancestor? │

│ └────────┬────────┘

│ │ Yes

│ ▼

│ ┌─────────────────┐

│ │ Request Bodies │◄─────────────┐

│ └────────┬────────┘ │

│ │ │

│ ▼ │

│ ┌─────────────────┐ │

│ │ Execute Batch │ │

│ │ (1024 blocks) │ │

│ └────────┬────────┘ │

│ │ │

│ ▼ │

│ ┌─────────────────┐ │

│ │ More Blocks? │──Yes─────────┘

│ └────────┬────────┘

│ │ No

│ ▼

│ ┌─────────────────┐

└─Error───│ SYNC DONE │

└─────────────────┘

Algorithm Details

#![allow(unused)] fn main() { // crates/networking/p2p/sync.rs async fn sync_cycle_full(sync_head: H256, store: Store) -> Result<()> }

-

Find Chain Link

- Request headers backwards from sync_head

- Stop when reaching a canonical block (already known)

- This identifies the fork point

-

Store Headers

- Save all new headers to temporary storage

- Headers are stored in batches during download

-

Download Bodies

- Request bodies for stored headers

- Match bodies to headers by hash

- Maximum 64 bodies per request

-

Execute Blocks

- Execute in batches of 1024 blocks

- Each block is fully validated and executed

- State is committed after each batch

-

Update Fork Choice

- After all blocks executed, update canonical chain

- Set new head, safe, and finalized blocks

Key Constants

#![allow(unused)] fn main() { const EXECUTE_BATCH_SIZE: usize = 1024; // Blocks per execution batch const MAX_BLOCK_BODIES_TO_REQUEST: usize = 64; // Bodies per request }

Snap Sync Algorithm

Snap sync downloads state directly from peers instead of executing all historical blocks.

State Machine

┌─────────────────────────────────────────────────────────────────────────────┐

│ SNAP SYNC STATE MACHINE │

└─────────────────────────────────────────────────────────────────────────────┘

┌──────────────┐

│ START SNAP │

│ SYNC │

└──────┬───────┘

│

▼

┌──────────────┐ ┌─────────────────────────────────────────────────────┐

│ Download │ │ Download headers to find sync head │

│ Headers │────▶│ Store hashes for later body download │

└──────┬───────┘ └─────────────────────────────────────────────────────┘

│

▼

┌──────────────┐ ┌─────────────────────────────────────────────────────┐

│ Select Pivot │────▶│ Choose recent block as pivot (must not be stale) │

│ Block │ │ Pivot block is target for state download │

└──────┬───────┘ └─────────────────────────────────────────────────────┘

│

▼

┌──────────────┐ ┌─────────────────────────────────────────────────────┐

│ Download │────▶│ Request account ranges via SNAP protocol │

│ Accounts │ │ Store account states to disk as snapshots │

└──────┬───────┘ └─────────────────────────────────────────────────────┘

│

▼

┌──────────────┐ ┌─────────────────────────────────────────────────────┐

│ Insert │────▶│ Build account trie from downloaded leaves │

│ Accounts │ │ Identify accounts with non-empty storage │

└──────┬───────┘ └─────────────────────────────────────────────────────┘

│

▼

┌──────────────┐ ┌─────────────────────────────────────────────────────┐

│ Download │────▶│ For each account with storage: │

│ Storage │ │ Request storage ranges and build storage tries. │

│ │ │ Includes a healing loop to fix state trie changes. │

└──────┬───────┘ └─────────────────────────────────────────────────────┘

│

▼

┌──────────────┐ ┌─────────────────────────────────────────────────────┐

│ Heal │────▶│ Heal state trie (fill missing nodes) │

│ Tries │ │ Heal storage tries for modified accounts │

└──────┬───────┘ └─────────────────────────────────────────────────────┘

│

▼

┌──────────────┐ ┌─────────────────────────────────────────────────────┐

│ Download │────▶│ Download bytecode for all contract accounts │

│ Bytecode │ │ Match by code hash │

└──────┬───────┘ └─────────────────────────────────────────────────────┘

│

▼

┌──────────────┐

│ SNAP SYNC │

│ COMPLETE │

└──────┬───────┘

│

▼

┌──────────────┐ ┌─────────────────────────────────────────────────────┐

│ Switch to │────▶│ Execute recent blocks from pivot to head │

│ Full Sync │ │ Continue with full sync for new blocks │

└──────────────┘ └─────────────────────────────────────────────────────┘

Phase 1: Header Download

Download all block headers from current head to sync target:

#![allow(unused)] fn main() { // crates/networking/p2p/sync.rs async fn sync_cycle_snap(sync_head: H256, store: Store) -> Result<()> }

- Request headers in batches

- Store header hashes for later use

- Identify pivot block (recent block whose state we'll download)

Phase 2: Pivot Selection

The pivot block must be:

- Recent enough to have state available on peers

- Not "stale" (older than SNAP_LIMIT * 12 seconds)

#![allow(unused)] fn main() { // crates/networking/p2p/sync.rs fn block_is_stale(header: &BlockHeader) -> bool { calculate_staleness_timestamp(header.timestamp) < current_unix_time() } const SNAP_LIMIT: usize = 128; // Blocks before pivot is considered stale }

If the pivot becomes stale during sync, a new pivot is selected:

#![allow(unused)] fn main() { async fn update_pivot(block_number: u64, ...) -> Result<BlockHeader> }

Phase 3: Account Download

Download all account states at the pivot block:

#![allow(unused)] fn main() { // Uses SNAP protocol GetAccountRange messages peers.request_account_range(start_hash, end_hash, snapshot_dir, pivot_header) }

- Accounts are saved to disk as RLP-encoded snapshots

- Each snapshot file contains a batch of (hash, account_state) pairs

- Process tracks code hashes for later bytecode download

Phase 4: Account Trie Construction

Build the account state trie from downloaded leaves:

#![allow(unused)] fn main() { async fn insert_accounts(store, storage_accounts, snapshots_dir, ...) -> (H256, accounts_with_storage) }

For RocksDB backend:

- Ingest snapshot files directly via SST ingestion

- Build trie using sorted insertion algorithm

- Track accounts with non-empty storage root

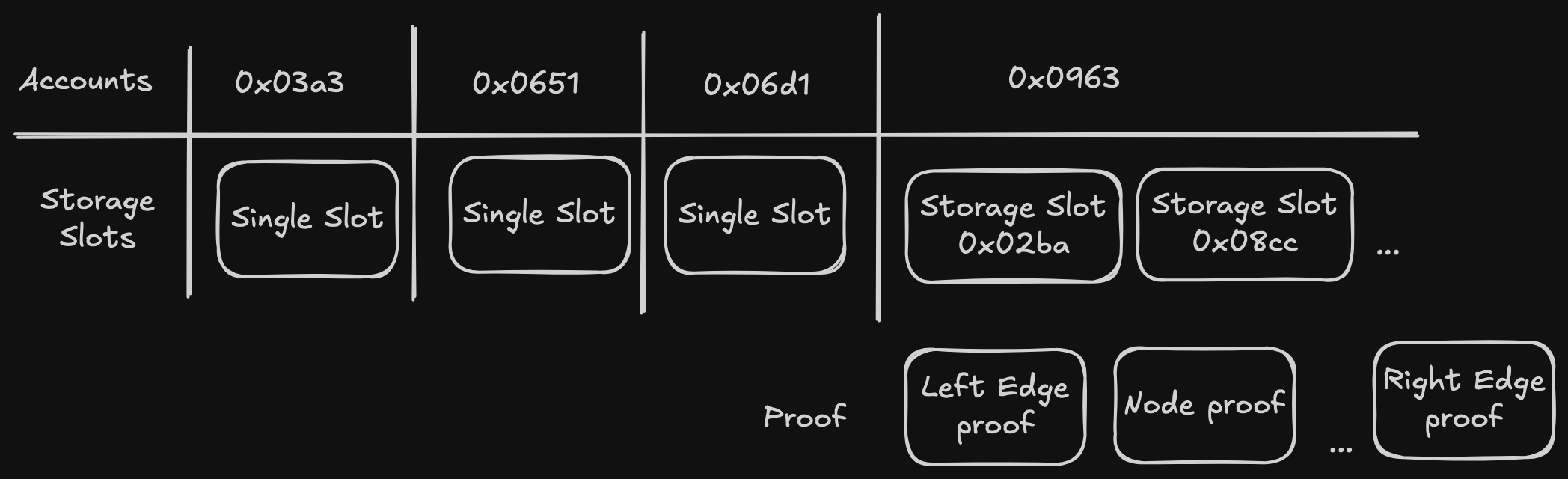

Phase 5: Storage Download

For each account with storage, download storage slots:

#![allow(unused)] fn main() { peers.request_storage_ranges(storage_accounts, snapshots_dir, chunk_index, pivot_header) }

- Multiple accounts can be requested per message

- Large accounts are downloaded in chunks

- "Big accounts" (>4096 slots) are marked for healing instead

Phase 6: Trie Healing

State may have changed while downloading. Healing fixes inconsistencies:

State Trie Healing:

#![allow(unused)] fn main() { async fn heal_state_trie_wrap(state_root, store, peers, deadline, ...) -> bool }

- Walk trie from root

- Request missing nodes from peers

- Fill in gaps caused by state changes

Storage Trie Healing:

#![allow(unused)] fn main() { async fn heal_storage_trie(state_root, accounts, peers, store, ...) -> bool }

- For each account marked for healing

- Request missing storage trie nodes

- Verify storage roots match account state

Phase 7: Bytecode Download

Download contract bytecode:

#![allow(unused)] fn main() { peers.request_bytecodes(&code_hashes) }

- Code hashes collected during account download

- Bytecode downloaded in chunks (50,000 per batch)

- Verified by hashing and comparing to code_hash

Phase 8: Transition to Full Sync

After snap sync completes:

- Store pivot block body

- Update fork choice to pivot

- Switch sync mode to Full

- Execute any remaining blocks normally

P2P Protocols Used

eth/68 Protocol

Used for block header and body download:

| Message | Purpose |

|---|---|

GetBlockHeaders | Request headers by number or hash |

BlockHeaders | Response with headers |

GetBlockBodies | Request bodies by hash |

BlockBodies | Response with bodies |

snap/1 Protocol

Used for state download during snap sync:

| Message | Purpose |

|---|---|

GetAccountRange | Request accounts in hash range |

AccountRange | Response with accounts and proof |

GetStorageRanges | Request storage for accounts |

StorageRanges | Response with storage and proofs |

GetByteCodes | Request bytecode by hash |

ByteCodes | Response with bytecode |

GetTrieNodes | Request specific trie nodes |

TrieNodes | Response with nodes |

Error Recovery

Recoverable Errors

These errors cause sync to retry:

- Peer disconnection

- Invalid response from peer

- Timeout waiting for response

- Database errors (transient)

Non-Recoverable Errors

These errors cause sync to abort with warning:

- Snapshot file corruption

- Database corruption

- State root mismatch after healing

#![allow(unused)] fn main() { // crates/networking/p2p/sync.rs impl SyncError { pub fn is_recoverable(&self) -> bool { match self { SyncError::Chain(_) | SyncError::Store(_) | ... => true, SyncError::CorruptDB | SyncError::SnapshotDecodeError(_) | ... => false, } } } }

Performance Optimizations

Parallel Operations

- Account trie insertion uses Rayon for parallelism

- Storage tries built in parallel across accounts

- Bytecode downloads are batched

Disk I/O

- Snapshot files written in batches to reduce writes

- RocksDB SST ingestion for fast account loading

- Temporary directories cleaned up after sync

Network

- Multiple peers used concurrently

- Peer scoring based on response time and validity

- Automatic peer rotation for failed requests

Metrics

Sync progress is tracked via metrics:

#![allow(unused)] fn main() { // crates/networking/p2p/metrics.rs METRICS.account_tries_inserted // Accounts added to trie METRICS.storage_leaves_inserted // Storage slots added METRICS.current_step // Current sync phase METRICS.sync_head_hash // Current sync target }

Configuration

| Option | Description | Default |

|---|---|---|

--syncmode | Sync mode (full or snap) | snap |

EXECUTE_BATCH_SIZE | Blocks per batch (env var) | 1024 |

MIN_FULL_BLOCKS | Min blocks to full sync in snap mode | 10,000 |

Related Documentation

- Snap Sync Internals - Detailed snap sync documentation

- Block Execution Pipeline - How blocks are executed

- Networking - P2P protocol details

Note: For comprehensive snap sync documentation, see Snap Sync Internals.

Crate Map

This document provides an overview of all crates in the ethrex monorepo and their responsibilities.

Crate Dependency Graph

┌─────────────────────────────────────┐

│ cmd/ethrex │

│ (Main binary entry point) │

└───────────────┬─────────────────────┘

│

┌─────────────────────────┼─────────────────────────┐

│ │ │

▼ ▼ ▼

┌───────────────────┐ ┌───────────────────┐ ┌───────────────────┐

│ networking/rpc │ │ networking/p2p │ │ blockchain │

│ (JSON-RPC API) │ │ (P2P networking) │ │ (Chain management)│

└─────────┬─────────┘ └─────────┬─────────┘ └─────────┬─────────┘

│ │ │

│ │ │

└─────────────────────────┼─────────────────────────┘

│

▼

┌─────────────────────────────┐

│ vm/levm │

│ (EVM implementation) │

└─────────────┬───────────────┘

│

▼

┌─────────────────────────────┐

│ storage │

│ (Data persistence) │

└─────────────┬───────────────┘

│

┌───────────────────────┼───────────────────────┐

│ │ │

▼ ▼ ▼

┌───────────────────┐ ┌───────────────────┐ ┌───────────────────┐

│ common/trie │ │ common/rlp │ │ common/types │

│ (Merkle Patricia) │ │ (RLP encoding) │ │ (Core data types) │

└───────────────────┘ └───────────────────┘ └───────────────────┘

Core Crates

ethrex-common

Purpose: Core data types and utilities shared across all crates.

Key Modules:

types/- Block, Transaction, Receipt, Account typestrie/- Merkle Patricia Trie implementationrlp/- RLP encoding/decodingcrypto/- Keccak hashing, signature recovery

Notable Types:

#![allow(unused)] fn main() { pub struct Block { header: BlockHeader, body: BlockBody } pub struct Transaction { /* variants for Legacy, EIP-2930, EIP-1559, EIP-4844, EIP-7702 */ } pub struct AccountState { nonce: u64, balance: U256, storage_root: H256, code_hash: H256 } }

ethrex-storage

Purpose: Persistent storage layer with multiple backend support.

Key Components:

Store- High-level API for all blockchain dataStoreEnginetrait - Backend abstractionInMemoryStore- Testing backendRocksDBStore- Production backend

Stored Data:

| Table | Contents |

|---|---|

block_numbers | Block hash → block number |

canonical_block_hashes | Block number → canonical hash |

headers | Block hash → BlockHeader |

bodies | Block hash → BlockBody |

receipts | Block hash + index → Receipt |

account_trie_nodes | Node hash → trie node data |

storage_trie_nodes | Node hash → trie node data |

account_codes | Code hash → bytecode |

account_flatkeyvalue | Account flat key-value store |

storage_flatkeyvalue | Storage flat key-value store |

ethrex-blockchain

Purpose: Chain management, block validation, and mempool.

Key Components:

Blockchain- Main orchestrator for chain operationsMempool- Pending transaction poolfork_choice- Fork choice rule implementationpayload- Block building for validatorsvalidate- Block and transaction validation

Public API:

#![allow(unused)] fn main() { impl Blockchain { pub fn add_block(&self, block: Block) -> Result<(), ChainError> pub fn add_block_pipeline(&self, block: Block) -> Result<(), ChainError> pub fn validate_transaction(&self, tx: &Transaction) -> Result<(), MempoolError> pub fn build_payload(&self, template: Block) -> Result<PayloadBuildResult, ChainError> pub fn get_payload(&self, id: u64) -> Result<PayloadBuildResult, ChainError> } }

ethrex-vm / levm

Purpose: Ethereum Virtual Machine implementation.

Key Components:

VM- Main EVM execution engineEvmtrait - VM interface for different contexts- Opcode handlers (one per EVM opcode)

- Precompiled contracts

- Gas metering

Execution Flow:

#![allow(unused)] fn main() { impl VM { pub fn execute(&mut self) -> Result<ExecutionReport, VMError> fn execute_opcode(&mut self, opcode: u8) -> Result<(), VMError> fn call(&mut self, ...) -> Result<CallOutcome, VMError> fn create(&mut self, ...) -> Result<CreateOutcome, VMError> } }

ethrex-networking/rpc

Purpose: JSON-RPC API server.

Supported Namespaces:

eth_*- Standard Ethereum methodsdebug_*- Debugging and tracingtxpool_*- Mempool inspectionadmin_*- Node administrationengine_*- Consensus client communicationweb3_*- Web3 utilities

Architecture:

#![allow(unused)] fn main() { pub trait RpcHandler: Send + Sync { fn parse(params: &Option<Vec<Value>>) -> Result<Self, RpcErr>; async fn handle(&self, context: RpcApiContext) -> Result<Value, RpcErr>; } }

ethrex-networking/p2p

Purpose: Peer-to-peer networking stack.

Protocol Layers:

- DiscV4 - Node discovery

- RLPx - Encrypted transport

- eth/68 - Ethereum wire protocol

- snap/1 - Snap sync protocol

Key Components:

PeerHandler- Manages peer connectionsPeerTable- Tracks known peers and their scoresSyncer- Synchronization state machineSyncManager- Coordinates sync operations

Supporting Crates

ethrex-common/trie

Purpose: Merkle Patricia Trie implementation.

Features:

- Standard MPT operations (get, insert, delete)

- Proof generation and verification

- Sorted insertion for snap sync

- Flat key-value store integration

ethrex-common/rlp

Purpose: Recursive Length Prefix encoding.

Traits:

#![allow(unused)] fn main() { pub trait RLPEncode { fn encode(&self, buf: &mut dyn BufMut); fn encode_to_vec(&self) -> Vec<u8>; } pub trait RLPDecode: Sized { fn decode(rlp: &[u8]) -> Result<Self, RLPDecodeError>; fn decode_unfinished(rlp: &[u8]) -> Result<(Self, &[u8]), RLPDecodeError>; } }

ethrex-metrics

Purpose: Prometheus metrics collection.

Metric Categories:

- Block metrics (height, gas, execution time)

- Transaction metrics (types, counts, errors)

- P2P metrics (peers, messages, sync progress)

- RPC metrics (requests, latency)

ethrex-crypto

Purpose: Cryptographic primitives.

Features:

- Keccak-256 hashing

- ECDSA signature recovery

- BLS signatures (for beacon chain)

L2-Specific Crates

ethrex-l2

Purpose: L2 sequencer and prover integration.

Components:

- Sequencer logic

- State diff computation

- Prover interface

- L1 interaction (deposits, withdrawals)

ethrex-prover

Purpose: Zero-knowledge proof generation.

Supported Provers:

- SP1 (Succinct)

- RISC0

- TDX (Trusted Execution)

Test and Development Crates

ef-tests

Purpose: Ethereum Foundation test runner.

Runs official Ethereum tests to verify protocol compliance.

ethrex-dev

Purpose: Development mode utilities.

Features:

- Local development network

- Block import from files

- Test fixtures

Crate Features

Many crates support feature flags:

| Crate | Feature | Effect |

|---|---|---|

ethrex-storage | rocksdb | Enable RocksDB backend |

ethrex-blockchain | metrics | Enable Prometheus metrics |

ethrex-networking/p2p | sync-test | Testing utilities for sync |

ethrex-networking/p2p | experimental-discv5 | Enable discv5 node discovery (experimental) |

Adding New Functionality

When adding new features, consider:

-

Where does it belong?

- Pure data types →

ethrex-common - Database operations →

ethrex-storage - EVM changes →

ethrex-vm - Chain logic →

ethrex-blockchain - API endpoints →

ethrex-networking/rpc - P2P messages →

ethrex-networking/p2p

- Pure data types →

-

Dependency direction

- Lower-level crates should not depend on higher-level ones

- Common types flow down, behaviors flow up

-

Testing

- Unit tests in the crate

- Integration tests in

tests/directory - EF tests for protocol compliance

Related Documentation

- System Overview - How crates work together

- Block Execution - Execution flow across crates

- Sync State Machine - Sync implementation details

Fundamentals

This section covers the core concepts and technical details behind ethrex as an Ethereum execution client. Here you'll find explanations about sync modes, networking, databases, security, and more.

note

This section is a work in progress and will be updated with more content and examples soon.

Databases

Ethrex uses a versioning system to ensure we don't run on invalid data if we restart the node after a breaking change to the DB structure. This system consists of a STORE_SCHEMA_VERSION constant, defined in crates/storage/lib.rs that must be increased after any breaking change and that is checked every time we start the node.

Networking

The network crate handles the ethereum networking protocols. This involves:

- Discovery protocol: built on top of udp and it is how we discover new nodes.

- devP2P: sits on top of tcp and is where the actual blockchain information exchange happens.

Implementation follows the official spec which can be found here. Also, we've been inspired by some geth code.

Discovery protocol

In the next section, we'll be looking at the discovery protocol (discv4 to be more specific) and the way we have it set up. There are many points for improvement and here we discuss some possible solutions to them.

At startup, the discovery server launches three concurrent tokio tasks:

- The listen loop for incoming requests.

- A revalidation loop to ensure peers remain responsive.

- A recursive lookup loop to request new peers and keep our table filled.

Before starting these tasks, we run a startup process to connect to an array of initial nodes.

Before diving into what each task does, first, we need to understand how we are storing our nodes. Nodes are stored in an in-memory matrix which we call a Kademlia table, though it isn't really a Kademlia table as we don't thoroughly follow the spec but we take it as a reference, you can read more here. This table holds:

- Our

node_id: The node's unique identifier computed by obtaining the keccak hash of the 64 bytes starting from index 1 of the encoded pub key. - A vector of 256

buckets which holds:peers: a vector of 16 elements of typePeersDatawhere we save the node record and other related data that we'll see later.replacements: a vector of 16 elements ofPeersDatathat are not connected to us, but we consider them as potential replacements for those nodes that have disconnected from us.

Peers are not assigned to any bucket but they are assigned based on its to our node_id. Distance is defined by:

#![allow(unused)] fn main() { pub fn distance(node_id_1: H512, node_id_2: H512) -> usize { let xor = node_id_1 ^ node_id_2; let distance = U256::from_big_endian(xor.as_bytes()); distance.bits().saturating_sub(1) } }

Startup

Before starting the server, we do a startup where we connect to an array of seeders or bootnodes. This involves:

- Receiving bootnodes via CLI params

- Inserting them into our table

- Pinging them to notify our presence, so they acknowledge us.

This startup is far from being completed. The current state allows us to do basic tests and connections. Later, we want to do a real startup by first trying to connect to those nodes we were previously connected. For that, we'd need to store nodes on the database. If those nodes aren't enough to fill our table, then we also ping some bootnodes, which could be hardcoded or received through the cli. Current issues are opened regarding startup and nodes db.

Listen loop

The listen loop handles messages sent to our socket. The spec defines 6 types of messages:

- Ping: Responds with a

pongmessage. If the peer is not in our table we add it, if the corresponding bucket is already filled then we add it as a replacement for that bucket. If it was inserted we send apingfrom our end to get an endpoint proof. - Pong: Verifies that the

pongcorresponds to a previously sentping, if so we mark the peer as proven. - FindNodes: Responds with a

neighborsmessage that contains as many as the 16 closest nodes from the given target. A target is a pubkey provided by the peer in the message. The response can't be sent in one packet as it might exceed the discv4 max packet size. So we split it into different packets. - Neighbors: First we verify that we have sent the corresponding

find_nodemessage. If so, we receive the peers, store them, and ping them. Also, everyfind_noderequest may have a tokioSenderattached, if that is the case, we forward the nodes from the message through the channel. This becomes useful when waiting for afind_noderesponse, something we do in the lookups. - ENRRequest: currently not implemented see here.

- ENRResponse: same as above.

Re-validations

Re-validations are tasks that are implemented as intervals, that is: they run an action every x whichever unit of time (currently configured to run every 30 seconds). The current flow of re-validation is as follows

- Every 30 seconds (by default) we ping the three least recently pinged peers: this may be fine now to keep simplicity, but we might prefer to choose three random peers instead to avoid the search which might become expensive as our buckets start to fill with more peers.

- In the next iteration we check if they have answered

- if they have: we increment the liveness field by one.

- otherwise: we decrement the liveness by a third of its value.

- If the liveness field is 0, we delete it and insert a new one from the replacements table.

Liveness checks are not part of the spec but are taken from geth, see here. This field is useful because it provides us with good criteria of which nodes are connected and we "trust" more. This trustiness is useful when deciding if we want to store this node in the database to use it as a future seeder or when establishing a connection in p2p.

Re-validations are another point of potential improvement. While it may be fine for now to keep simplicity at max, pinging the last recently pinged peers becomes quite expensive as the number of peers in the table increases. And it also isn't very "just" in selecting nodes so that they get their liveness increased so we trust them more and we might consider them as a seeder. A possible improvement could be:

- Keep two lists: one for nodes that have already been pinged, and another one for nodes that have not yet been revalidated. Let's call the former "a" and the second "b".

- In the beginning, all nodes would belong to "a" and whenever we insert a new node, they would be pushed to "a".

- We would have two intervals: one for pinging "a" and another for pinging to nodes in "b". The "b" would be quicker, as no initial validation has been done.

- When picking a node to ping, we would do it randomly, which is the best form of justice for a node to become trusted by us.

- When a node from

bresponds successfully, we move it toa, and when one fromadoes not respond, we move it tob.

This improvement follows somewhat what geth does, see here.

Recursive Lookups

Recursive lookups are as with re-validations implemented as intervals. Their current flow is as follows:

- Every 30min we spawn three concurrent lookups: one closest to our pubkey and two others closest to randomly generated pubkeys.

- Every lookup starts with the closest nodes from our table. Each lookup keeps track of:

- Peers that have already been asked for nodes

- Peers that have been already seen

- Potential peers to query for nodes: a vector of up to 16 entries holding the closest peers to the pubkey. This vector is initially filled with nodes from our table.

- We send a

find_nodeto the closest 3 nodes (that we have not yet asked) from the pubkey. - We wait for the neighbors' response and push or replace those who are closer to the potential peers.

- We select three other nodes from the potential peers vector and do the same until one lookup has no node to ask.

The way to do lookups isn't part of the spec. Our implementation aligns with geth approach, see here.

An example of how you might build a network

Finally, here is an example of how you could build a network and see how they connect to each other:

We'll have three nodes: a, b, and c, we'll start a, then b setting a as a bootnode, and finally we'll start c with b as bootnode we should see that c connects to both a and b and so all the network should be connected.

node a:

cargo run --release -- --network ./fixtures/genesis/kurtosis.json

We get the enode by querying the node_info and using jq:

curl -s http://localhost:8545 \

-X POST \

-H "Content-Type: application/json" \

--data '{"jsonrpc":"2.0","method":"admin_nodeInfo","params":[],"id":1}' \

| jq '.result.enode'

node b:

We start a new server passing the enode from node a as an argument. Also changing the database dir and the ports is needed to avoid conflicts.

cargo run --release -- --network ./fixtures/genesis/kurtosis.json --bootnodes=`NODE_A_ENODE` \

--datadir=ethrex_b --authrpc.port=8552 --http.port=8546 --p2p.port=30305 --discovery.port=30306

node c

Finally, with node_c we connect to node_b. When the lookup runs, node_c should end up connecting to node_a:

cargo run --release -- --network ./fixtures/genesis/kurtosis.json --bootnodes=`NODE_B_ENODE` \

--datadir=ethrex_c --authrpc.port=8553 --http.port=8547 --p2p.port=30308 --discovery.port=30310

We get the enode by querying the node_info and using jq:

curl -s http://localhost:8546 \

-X POST \

-H "Content-Type: application/json" \

--data '{"jsonrpc":"2.0","method":"admin_nodeInfo","params":[],"id":1}' \

| jq '.result.enode'

You could also spawn nodes from other clients and it should work as well.

Sync Modes

Full sync

Full syncing works by downloading and executing every block from genesis. This means that full syncing will only work for networks that started after The Merge, as ethrex only supports post merge execution.

Snap sync

For snap sync, you can view the main document here.

Snap Sync

API reference: https://github.com/ethereum/devp2p/blob/master/caps/snap.md

Terminology:

- Peers: Other Ethereum execution clients we are connected to, and which can respond to snap requests.

- Pivot: The block we have chosen to snap-sync to. The pivot block changes continually as it becomes too old, because nodes don't serve old data (i.e. more than 128 blocks in the past). Read below for more details on this.

Concept

What

Executing all blocks to rebuild the state is slow. It is also not possible on ethrex, because we don’t support pre-merge execution. Therefore, we need to download the current state from our peers. The largest challenge in snap sync is downloading the state (the account state trie and storage state tries). Secondary concerns are downloading headers and bytecodes.

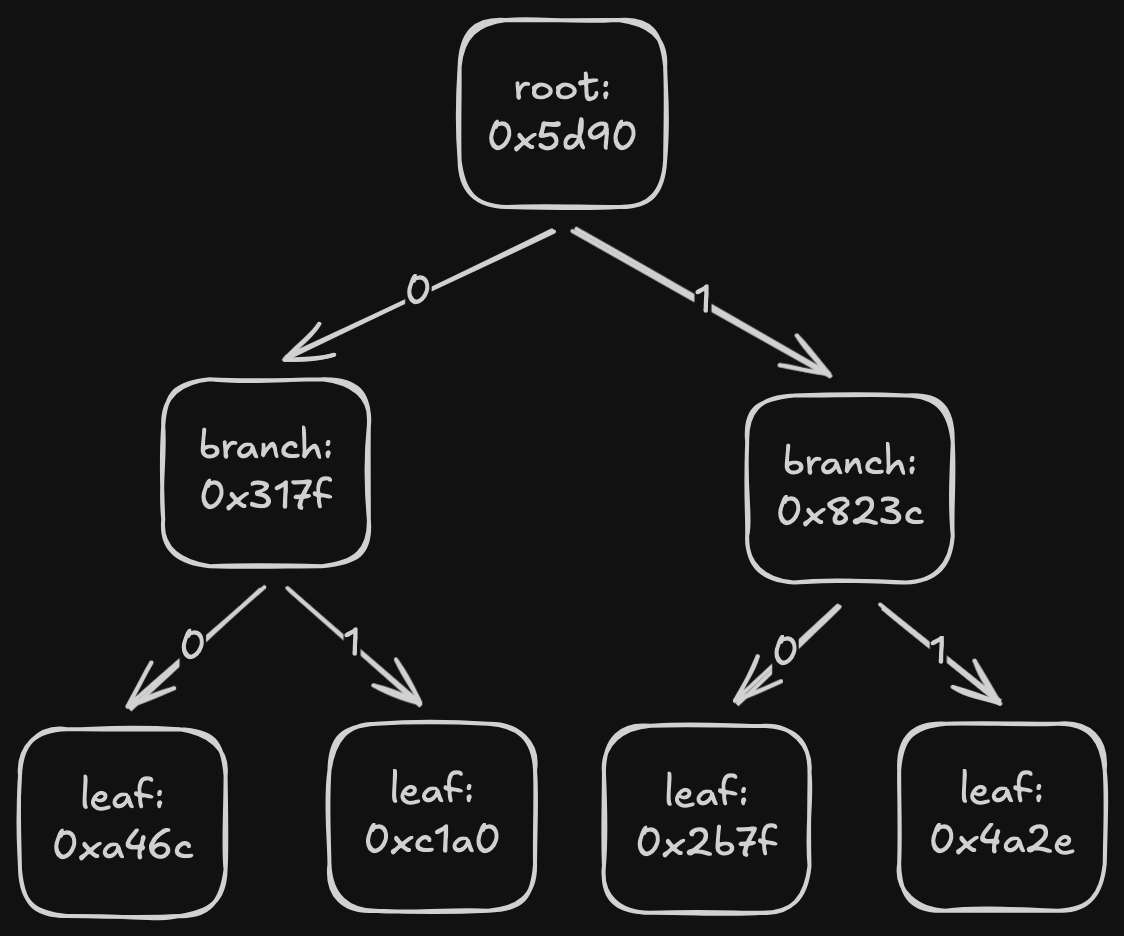

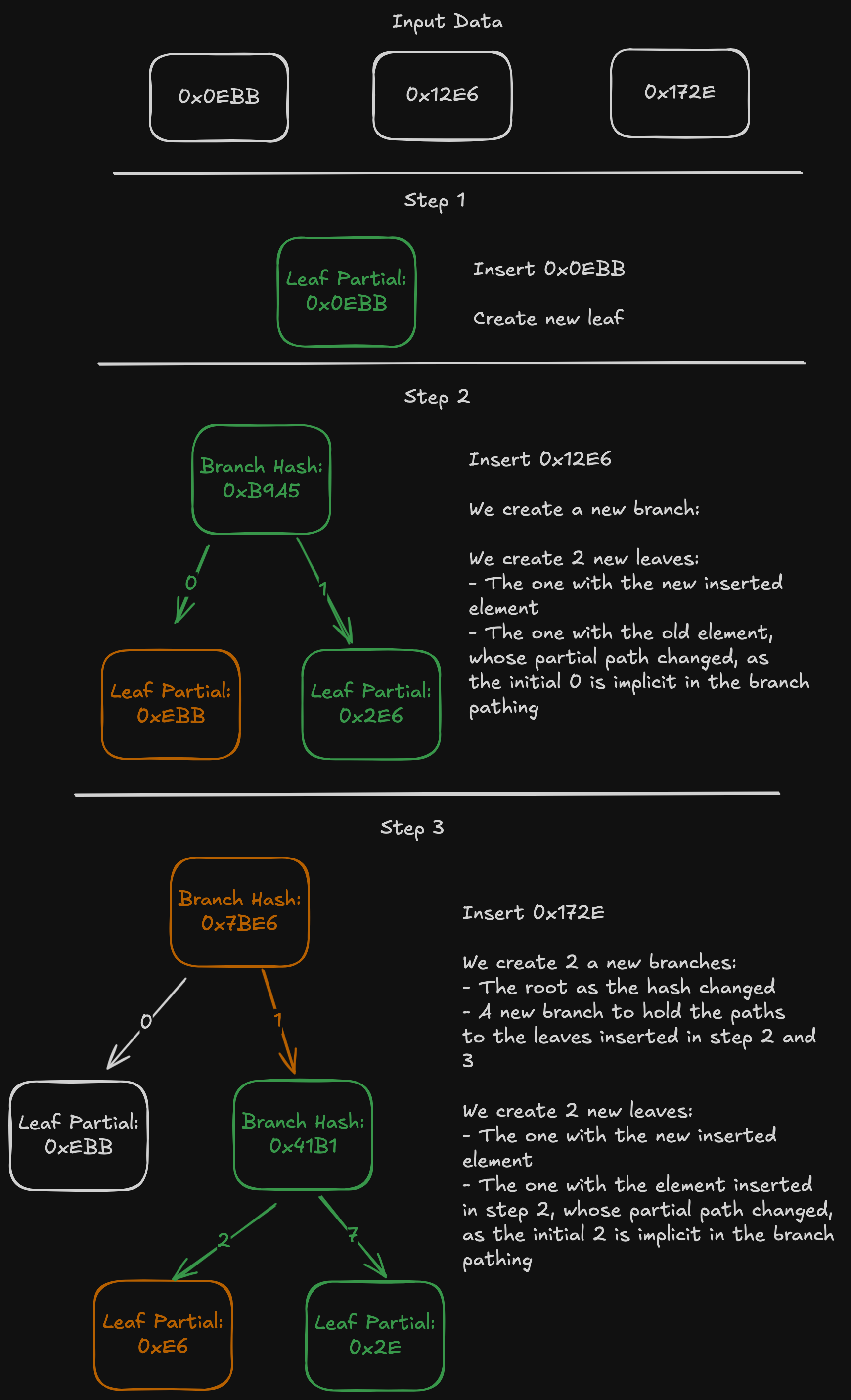

First solution: Fast-Sync

Fast-sync is the original method used in Ethereum to download a Patricia Merkle trie. The idea is to download the trie top-down, starting from the root, then recursively downloading child nodes until the entire trie is obtained.

There are two problems with this:

- Peers stop responding to node requests at some point. When requesting a trie node, you specify the state root for which you want the node. If the root is 128 or more blocks old, peers will not serve the request.

- Scanning the entire trie to find missing nodes is slow.

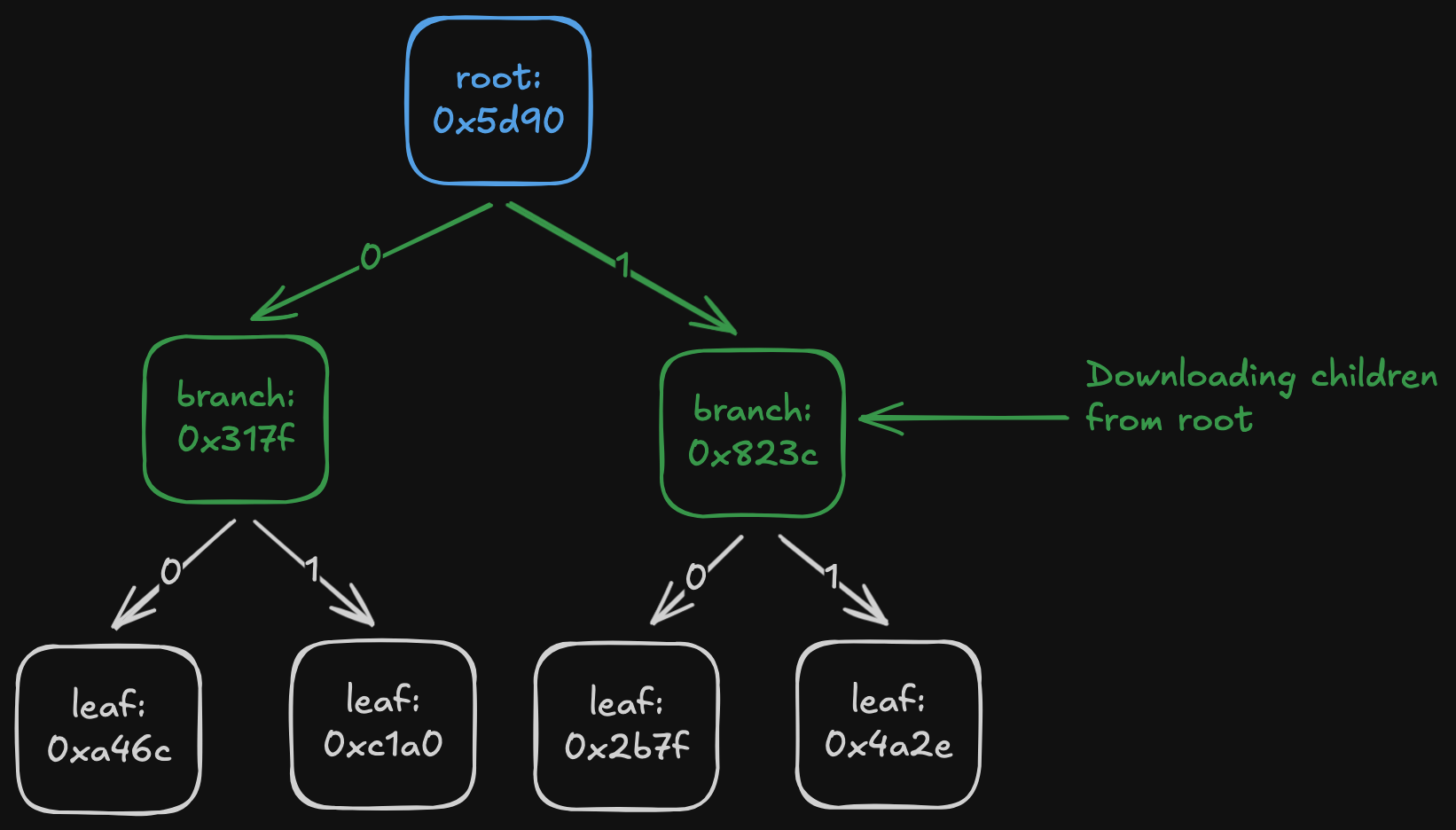

For the first problem: once peers stop serving nodes for a given root1, we stop fast-syncing, update the pivot, and restart the process. The naïve approach would be to download the new root and recursively fetch all its children, checking each time whether they already exist in the DB.

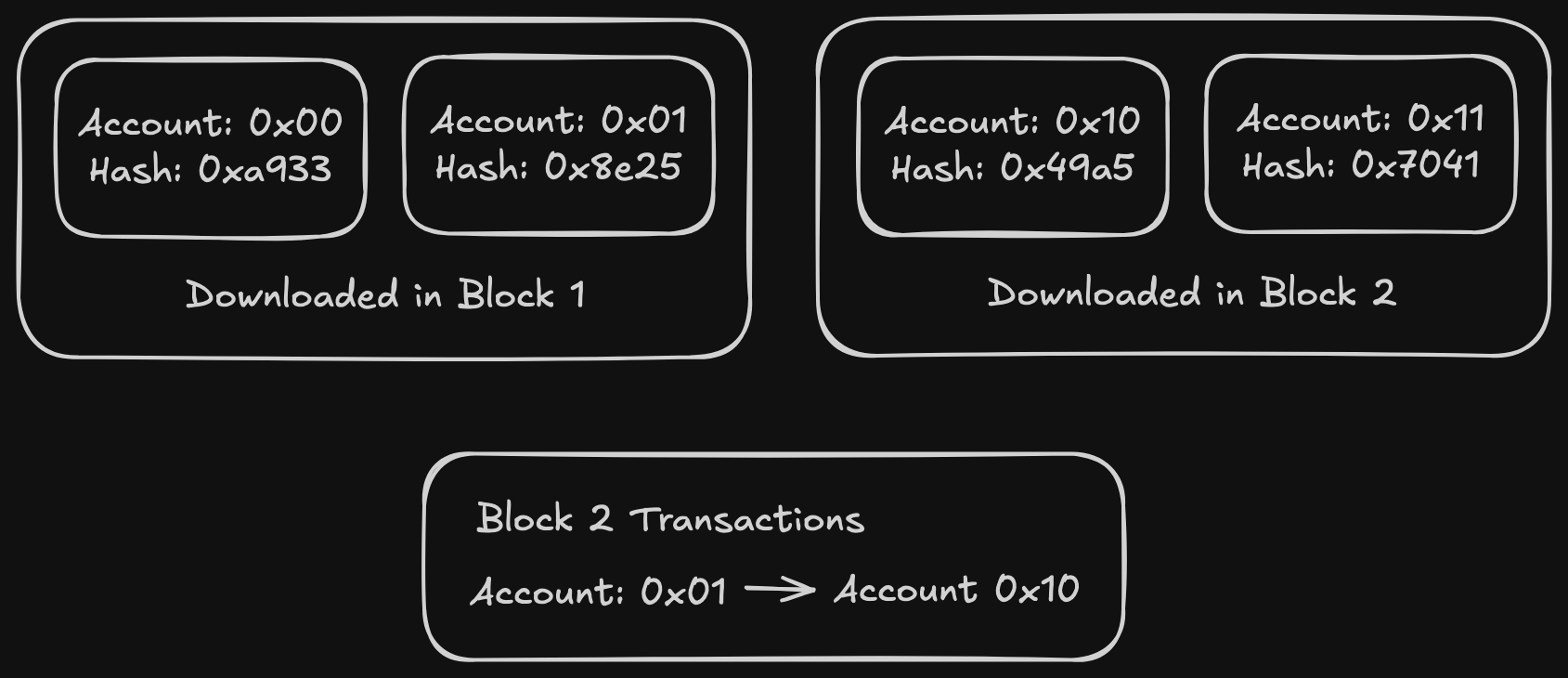

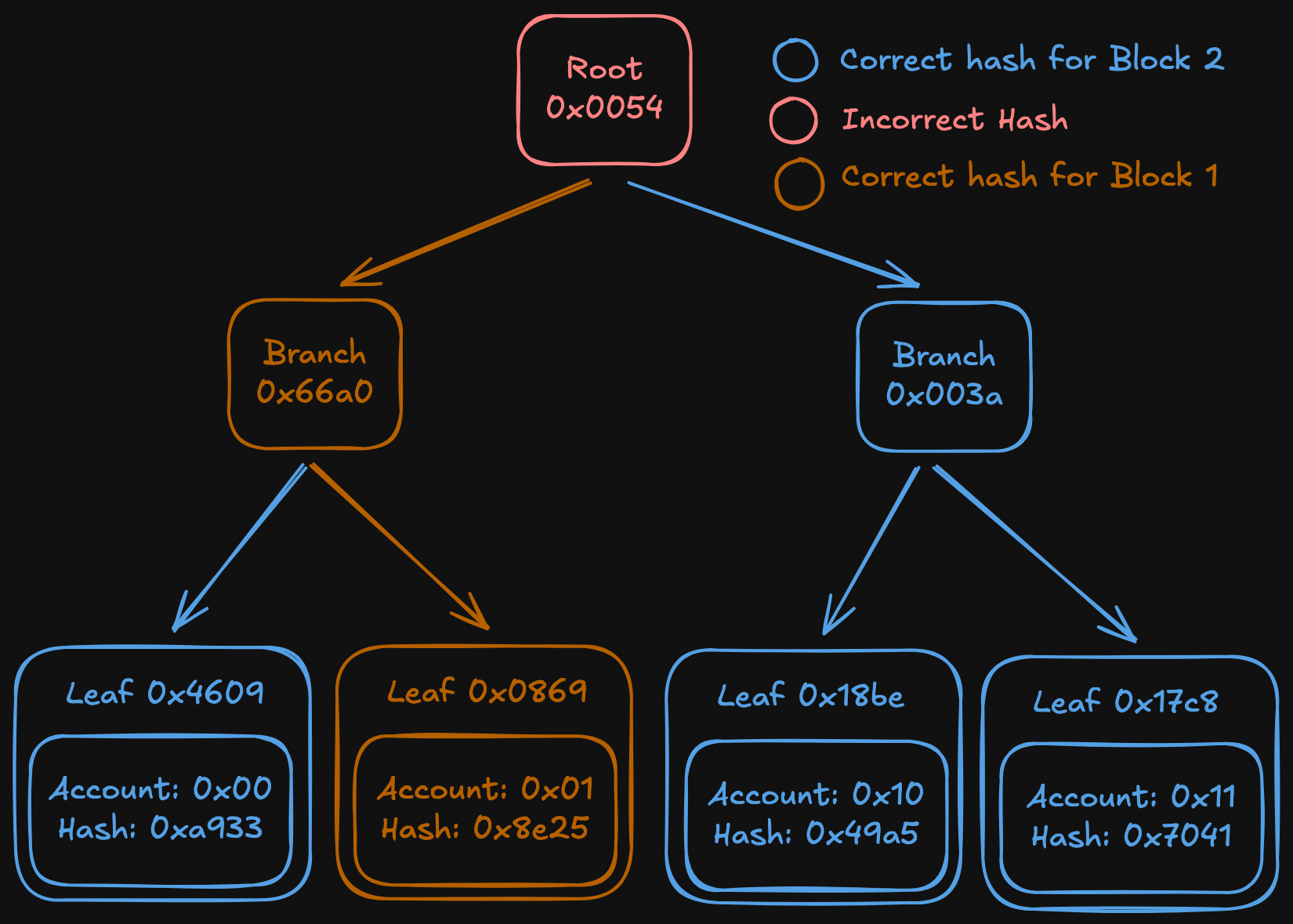

Example of a possible state after stopping fast sync due to staleness.

In the example, even if we find that node { hash: 0x317f, path: 0 } is correct, we still need to check all its children in the DB (in this case none are present).

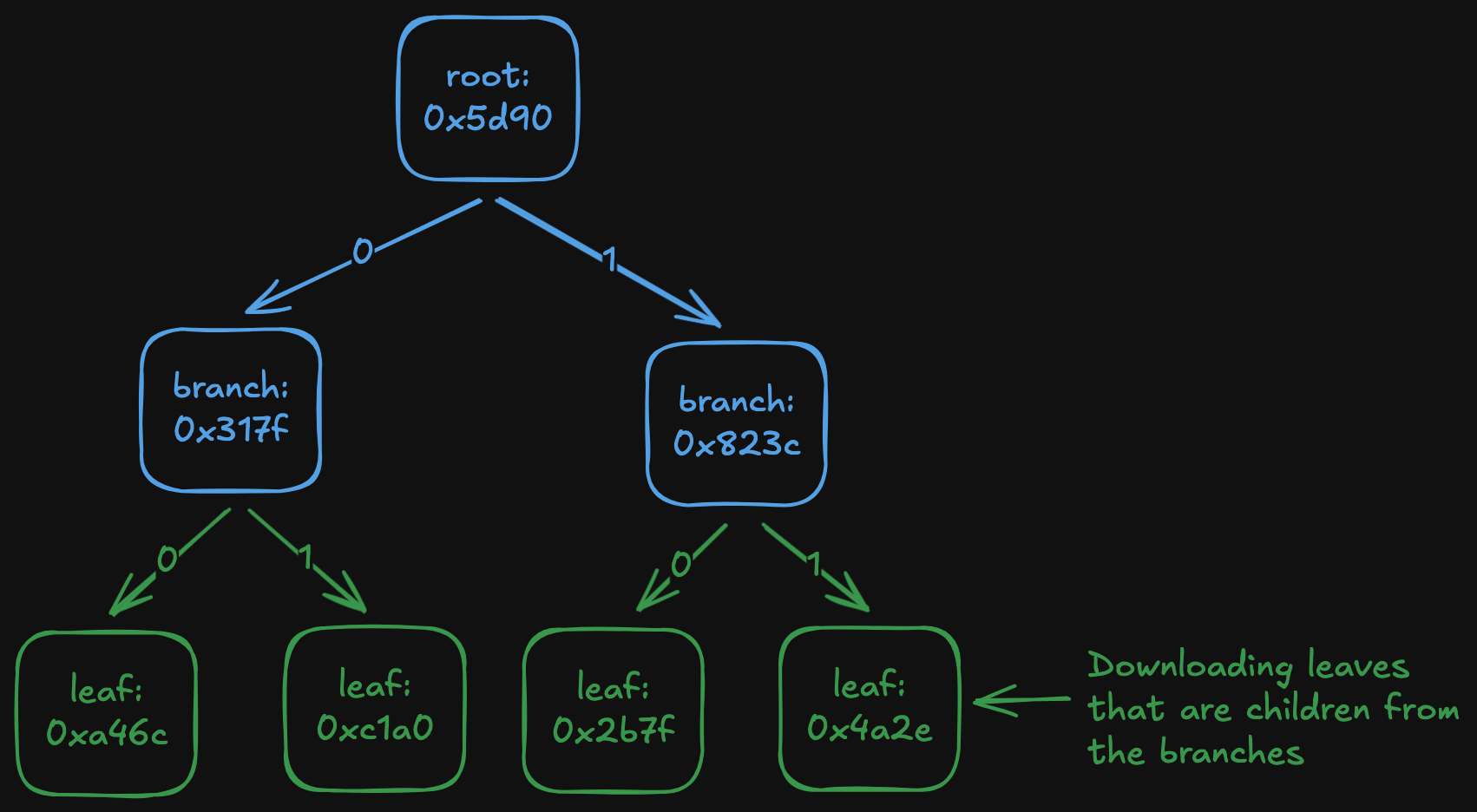

To solve the second problem, we introduce an optimization, which is called the "Membatch"2. This allows us to maintain a new invariant:

If a node is present in the DB, then that node and all its children must be present.

This removes the need to explore entire subtrees: when walking down the trie, if a node is in the DB, the whole subtree can be skipped.

To maintain this invariant, we do the following:

- When we get a new node, we don't immediately store it in the database. We keep track of the amount of every node's children that are not yet in the database. As long as it's not zero, we keep it in a separate in-memory structure "Membatch" instead of on the db.

- When a node has all of its children in the db, we commit it and recursively go up the tree to see if its parent needs to be committed, etc.

- When the nodes are written to the database, all its parents are deleted from the db, which preserves the subtree invariant. Because lower down nodes are always written first, we never delete a valid node.

Example of a possible state after stopping fast sync due to staleness with membatch.

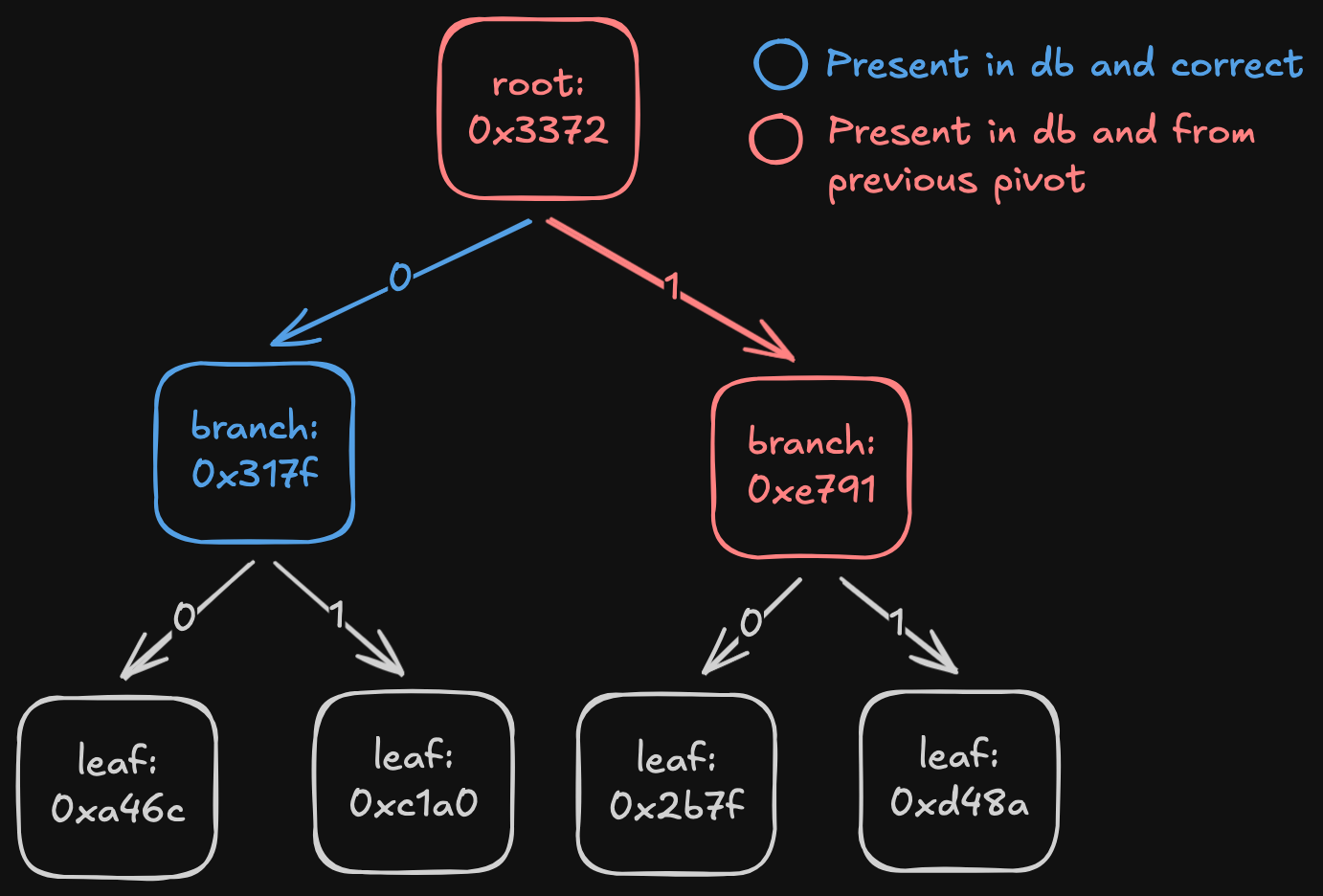

Speeding up: Snap-Sync

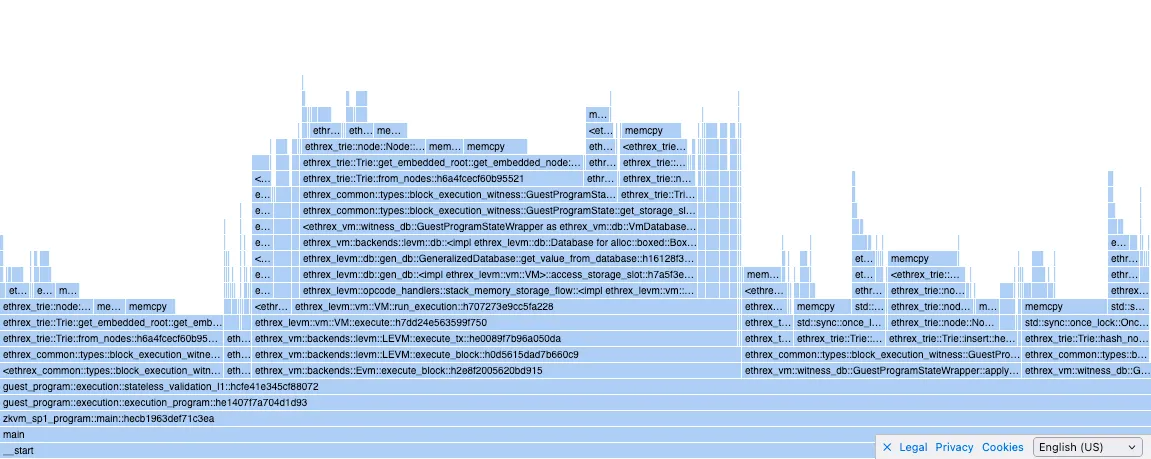

Fast-sync is slow (ethrex fast-sync of a fresh “hoodi” state takes ~45 minutes—4.5× slower than snap-sync). To accelerate it, we use a key property of fast-sync:

Fast-sync can “heal” any partial trie that obeys the invariant, even if that trie is not consistent with any real on-chain state.

Snap sync exploits this by:

- downloading only the leaves (the accounts and storage slots) from any recent state

- assembling a trie from these leaves

- running fast-sync (“healing”) to repair it into a consistent trie. In our code, we call the fast-sync step "healing".

Example run:

- We download the 4 accounts in the state trie from two different blocks

- We rebuild the trie

- We run the healing algorithm and get a correct tree at the end of it

This method alone provides up to a 4-5 times boost in performance, as computing the trie is way faster than downloading it.

Implementation

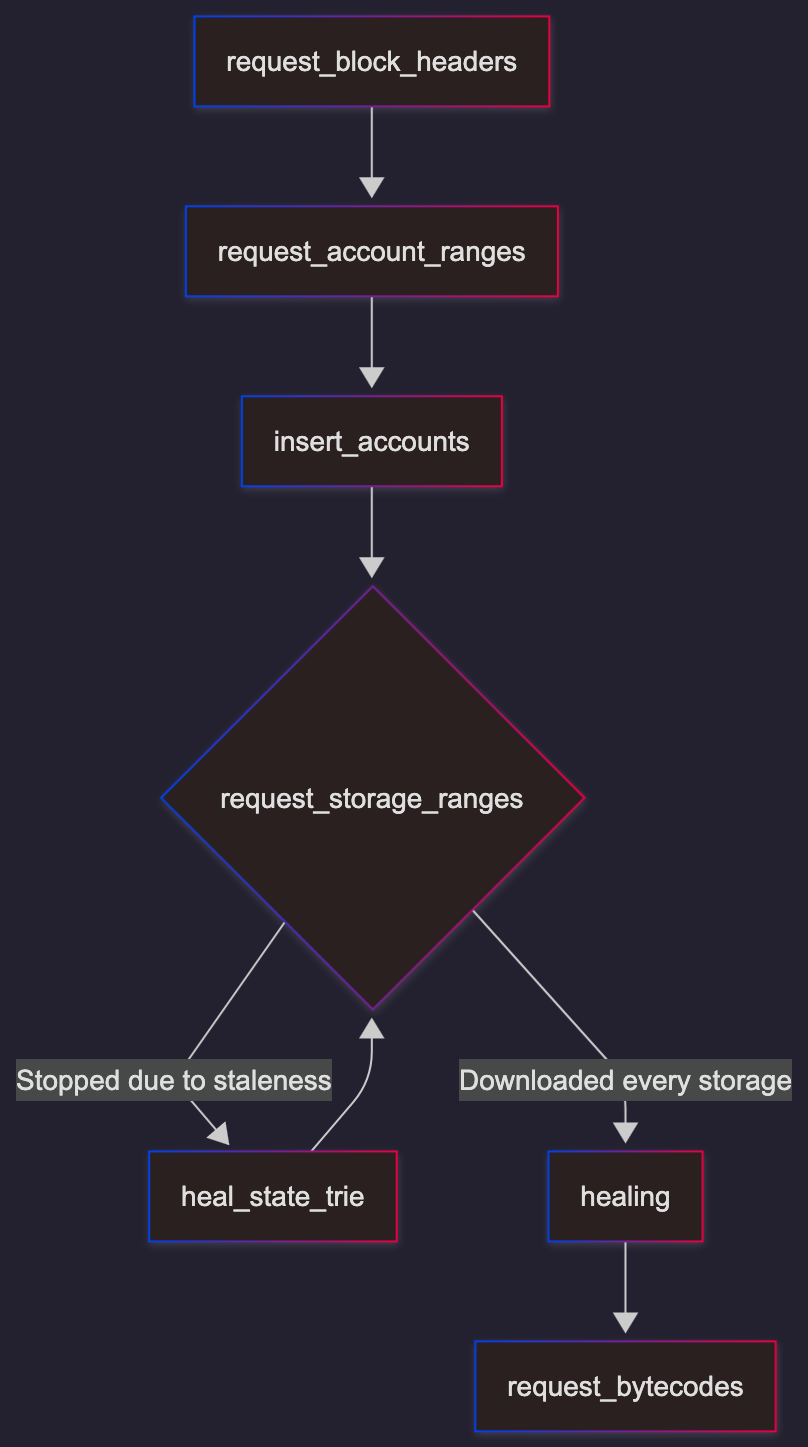

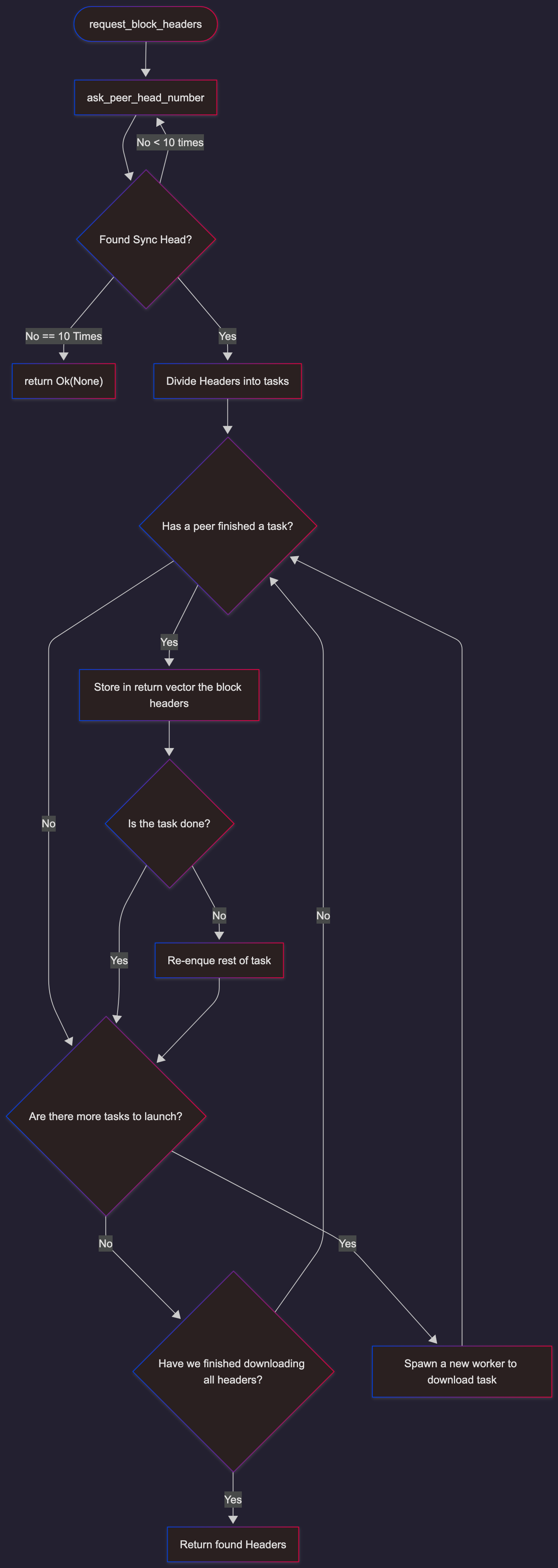

Generalized Flowchart of snapsync

Flags

When developing snap sync there are flags to take into account that are used only for testing:

-

If the

SKIP_START_SNAP_SYNCenvironment variable is set and isn't empty, it will skip the step of downloading the leaves and will immediately begin healing. This simulates the behaviour of fast-sync. -

If debug assertions are on, the program will validate that the entire state and storage tries are valid by traversing the entire trie and recomputing the roots. If any is found to be wrong, it will print an error and exit the program. This is used for debugging purposes; a validation error here means that there is 100% a bug in snap sync.

-

--syncmode [full, default:snap]which defines what kind of sync we use. Full is executing each block, and isn't possible on a fresh sync for mainnet and sepolia.

File Structure

The sync module is a component of the ethrex-p2p crate, found in crates/networking/p2p folder. The main sync functions are found in:

crates/networking/p2p/sync.rscrates/networking/p2p/peer_handler.rscrates/networking/p2p/sync/state_healing.rscrates/networking/p2p/sync/storage_healing.rscrates/networking/p2p/sync/code_collector.rs

Syncer and Sync Modes

The struct that handles the needed handles for syncing is the Syncer, and it has a variable to indicate if the snap mode is enabled. The Sync Modes are defined in sync.rs as follows.